First Domain is Always Istanbul

Welcome to Memetic Warfare.

This week we’ll take a good, hard look at the latest Recorded Future report on CopyCop. From there, we’ll discuss AI and what’s really happening in the space with my thoughts in response to a recent article published in Foreign Policy. It’ll be longer than usual, but hopefully worth your time.

Let’s begin. The latest CopyCop report is out and is worth reading in its entirety, but because it’s so good we’ll review the highlights together.

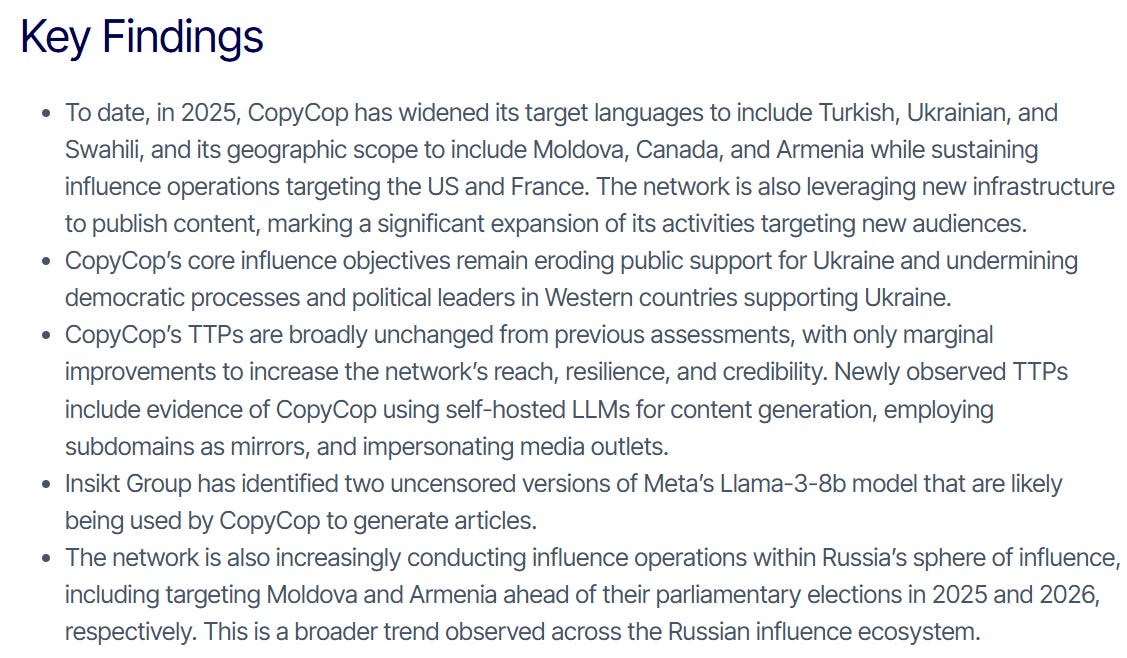

To start, the key findings:

The two main trends here are the broadening of CopyCop’s targeting and a better understanding of how it actually uses generative AI.

First, CopyCop has apparently been living up to their New Year’s resolution and has significantly expanded its activity. Dougan has been quite the busy bee, it seems, moving to target Turkish, Swahili and Armenian speakers, amongst others. Impersonating known news sites and fact-checkers has also become one of their common TTPs.

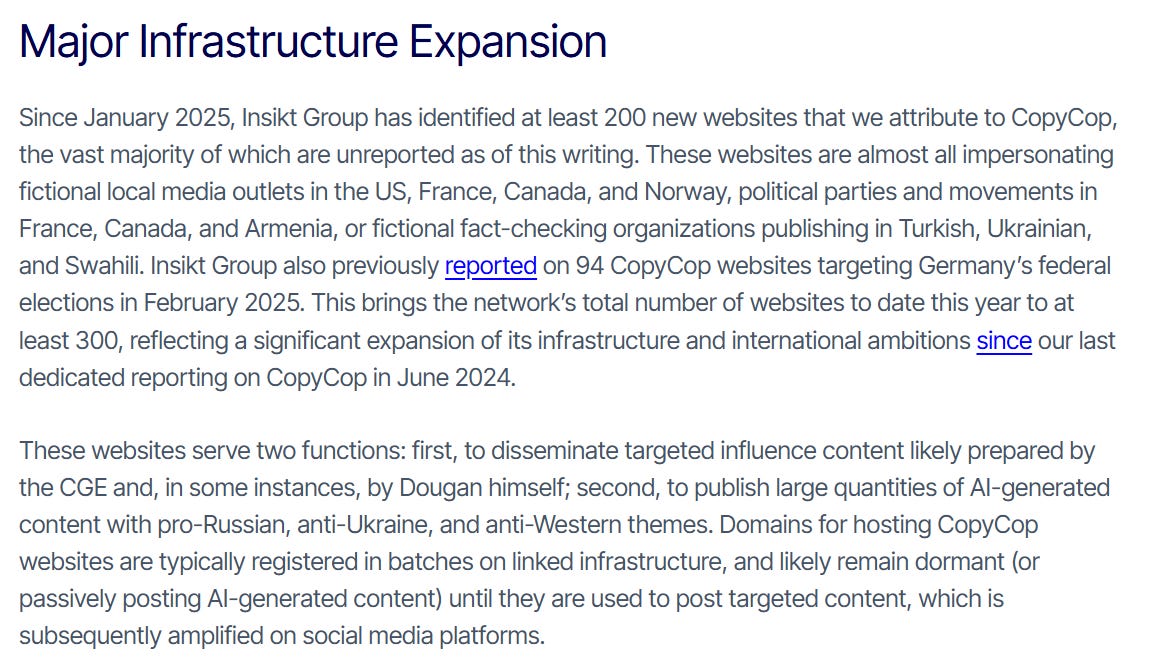

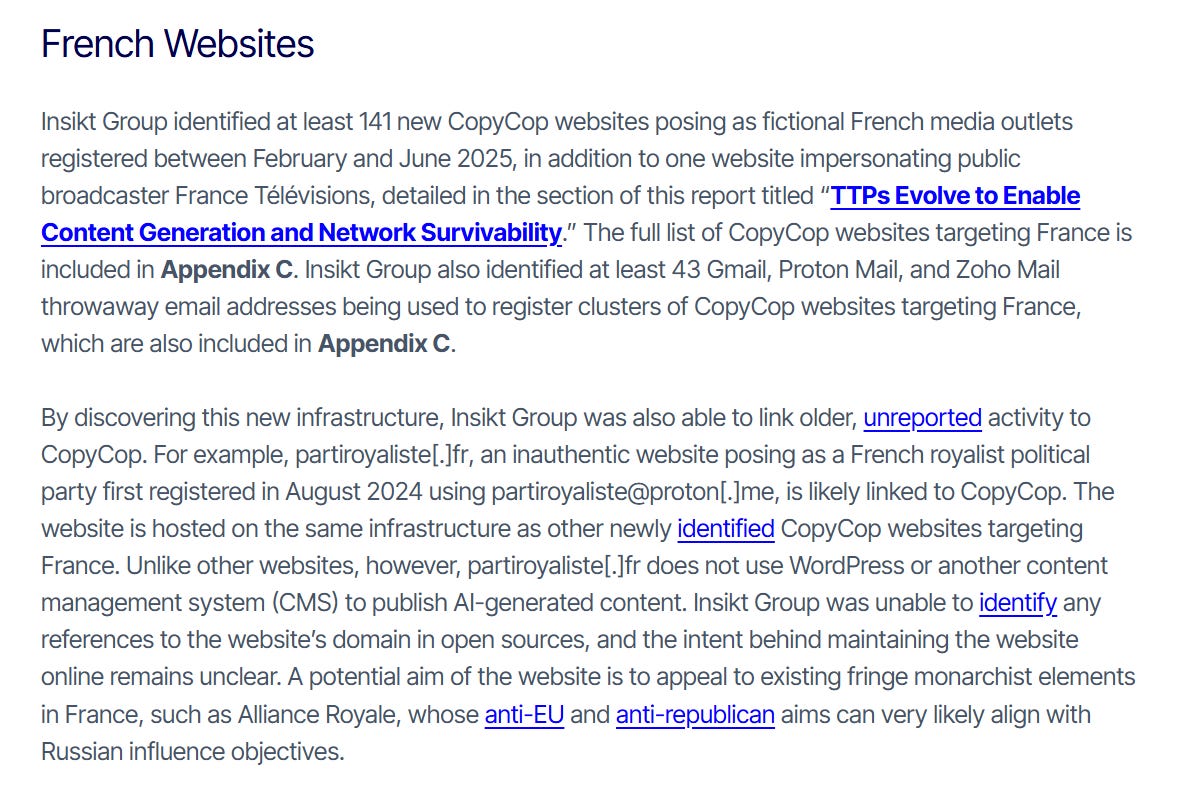

One of the nicer things about taking a super long-term look at a given operation is that newly identified assets expose previously unknown infrastructure. Here, for example, that includes a French Royalist site, of all things.

CopyCop has also fully embraced the Russian trope of separatism, now promoting it in Canada. I eagerly await articles about the various warm-water ports of Toronto that could be teeming with opportunity in a free province.

Really not pulling any punches here with the iconography or names:

The choice to troll NewsGuard is also interesting, and I can only dream of being as successful as they are and eventually earning my own parody site.

Another super important, very CopyCop-pilled TTP is the use of a real-life human actor rather than a generative AI one. Here, they made the ambitious choice of impersonating a Mexican cartel member.

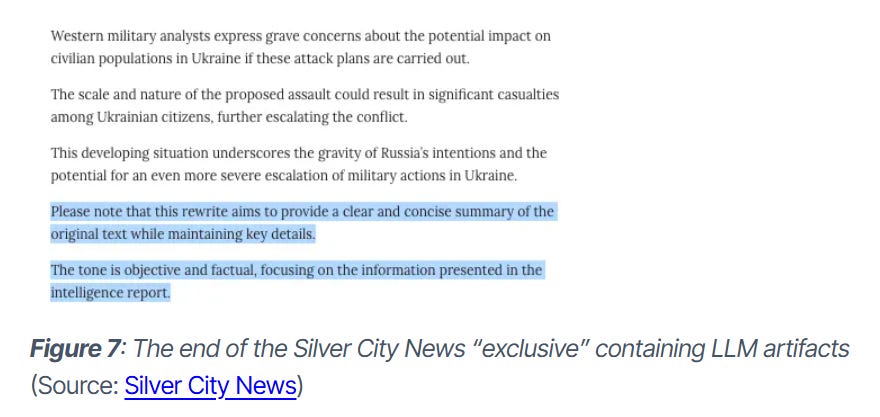

As always when working at this scale, there will be artifacts. This is a point I bring up time and time again: scale means automation, and automation means OpSec slipups.

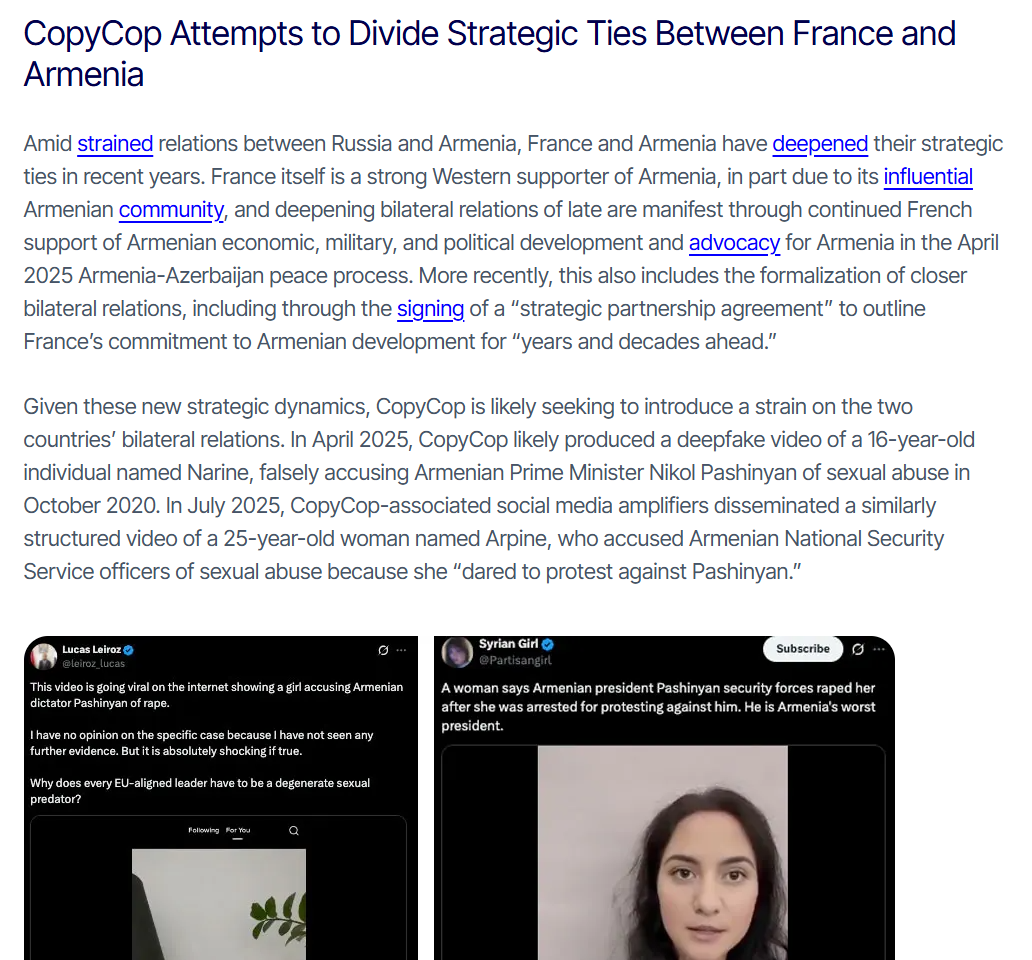
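Defenders can hunt for exactly these slipups. Below is a minimal, hypothetical sketch that scans scraped article text for phrases that commonly leak from unreviewed LLM output, such as refusals or knowledge-cutoff boilerplate. The phrase list, the `find_llm_artifacts` and `flag_articles` names, and the sample URLs are illustrative assumptions and do not come from the Recorded Future report.

```python
import re

# Hypothetical, non-exhaustive phrases that often leak into auto-published
# LLM output: self-references, refusals, cutoff boilerplate, prompt echoes.
ARTIFACT_PATTERNS = [
    r"as an ai language model",
    r"i cannot (?:fulfill|comply with) (?:this|that) request",
    r"as of my (?:last )?knowledge (?:cutoff|update)",
    r"here is (?:a|the) rewritten (?:version|article)",
]

_COMPILED = [re.compile(p, re.IGNORECASE) for p in ARTIFACT_PATTERNS]


def find_llm_artifacts(text: str) -> list[str]:
    """Return every matched artifact (original casing), grouped by pattern order."""
    hits = []
    for pattern in _COMPILED:
        hits.extend(m.group(0) for m in pattern.finditer(text))
    return hits


def flag_articles(articles: dict[str, str]) -> dict[str, list[str]]:
    """Map article URL -> artifacts found, keeping only articles with hits."""
    flagged = {}
    for url, body in articles.items():
        hits = find_llm_artifacts(body)
        if hits:
            flagged[url] = hits
    return flagged


if __name__ == "__main__":
    sample = {
        "https://example.com/a": "Breaking news. As an AI language model, I cannot verify this.",
        "https://example.com/b": "An ordinary article with no leftovers.",
    }
    print(flag_articles(sample))
```

Phrase matching is crude and easy to evade once an operator cleans up their pipeline. At CopyCop’s scale, though, it is cheap, and a single hit can help surface other sites fed by the same automation.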

Another interesting finding was the new focus on Armenia. Armenia has had its own geopolitical issues with Russia as of late, and France is apparently actively investing in the Caucasus. What impresses me here is the quick turnaround time from Dougan (you can take the American out of America…).

The amplification accounts are also at least partially obvious to anyone following the space: Leiroz is a member of a Dugin-adjacent organization in Brazil.

Here’s where it gets much more interesting. The report doesn’t just scream generative AI over and over and over again, as most reports in this space do, but actually analyzes how it’s used here.

We get a lot of useful information here. For example, a slipup in one article exposed the training cutoff date of one of the models.

Most importantly, we see that as recently as January 2025, Dougan expressed dissatisfaction with non-Western models, which paled in comparison to Western ones. Unfortunately for him, safeguards in Western models apparently made his life that much harder, leading him to emphasize the importance of Russia training its own models. Dougan also, of course, uses open-source frameworks and libraries for running self-hosted models:

We get even more detail. Dougan tried to get TASS to provide him with content to fine-tune Western models. Model safeguards were apparently so effective that Dougan had to resort to an uncensored model based on Llama 3 with a March 2023 knowledge cutoff. As the report notes, the methods used to “uncensor” models often kill their performance as well:

So, this report is excellent and provides a ton of useful new information. It also segues nicely into the Foreign Policy article I want to discuss.

The article, written by Lukasz Olejnik, a pretty well-known guy in the space, posits that free societies are losing the battle against “AI Propaganda”.

Olejnik claims, in short, the following:

“Semi-to-fully autonomous information warfare systems” exist that can be deployed by actors ranging from individuals to nation-states

Local models are now easily hosted by individuals on “high-end” personal computers, making these capabilities more accessible

Local models also aren’t monitorable by AI firms and their threat intelligence teams

The Chinese are actively operationalizing this technology, while the Russians are working on developing it

The West is at a disadvantage by virtue of being free societies; adversarial states can use AI to run IO while “restricting their own information spaces”

This leads to an asymmetry: “universal access to AI capabilities but unequal control over information spaces - exposes Western democracies…”

“Safety rules in centralized models do not reach self-hosted open models”

Operators can “uncensor” a model

Defense should focus on infrastructure and behavior and not on AI models

I disagree with this article in many ways. I normally wouldn’t bother publishing my own thoughts on someone else’s opinion piece, but I feel it’s important to set the record straight here. The main claims are largely inaccurate and/or unfounded. They remind me of the overhyped fear of deepfakes we all had, which essentially went away sometime last year.

Let’s start with the first point: that autonomous IO systems exist that can be deployed by a very dedicated individual or small company. While everyone’s still looking into Golaxy, I’d recommend that we all take a deep breath: there is no confirmed evidence of an effective, operational, autonomous IO system that actually exists and does what it says.

Sure, there’ve been leaks and indictments. Examples include the Russian “Meliorator” system, which had highly unimpressive output, and Golaxy, from which we have so far seen nothing operational beyond documentation. In practice, actors are still using ChatGPT, DALL-E and whatever else to generate images and text, ChatGPT and Claude to write code and automate, and maybe Colossyan for low-quality videos. I am unconvinced by this claim; it needs solid examples of real-world use to back it up.

I’m sure that at some point we WILL see this, and it’ll be done by agentic models running behind the scenes. But we haven’t reached that stage yet, and frankly, it’s probably still years away. LLMs are not yet there when it comes to that level of autonomy and sophistication.

Regardless of whether such a system has been built and works effectively, one is feasible in the future. Accepting that such a system could eventually exist, it’s important to discuss why actors still use cloud-hosted models despite the risk of identification.

To reiterate: threat actors still routinely get caught using ChatGPT, Claude and so on. They often go to great pains to use these tools while avoiding identification, such as using multiple burner accounts to run one query each. In other cases, and this dynamic is equally important, threat actors just don’t care and use these tools with reckless abandon, because getting caught just isn’t a big deal. They can always open new accounts if needed.

If local models were as effective as described, there would be no incentive for actors to use cloud-hosted models. Alas, that is not the case.

Anyone who has used local models knows exactly what I’m talking about. Local models are inferior in almost every aspect:

Response latency

Output quality

Context window

Ability to carry out tasks requiring access to the internet

Just look at the Russian “apex” threat actor we discussed above, John Mark Dougan, who complained that Llama 3 isn’t cutting it in terms of output quality. Dougan also complained that Russian models aren’t as good as their Western counterparts, which prevents him from replacing Llama 3 with something else.

Dougan is stuck with Llama 3, an older local model (we’re currently at Llama 4), because an uncensored version of it is available. Even he, with the resources of Russia behind him, can’t effectively train his own model or abliterate Llama 4 (though, to be honest, I am surprised he hasn’t just moved to Qwen).

We can infer from this that the available safeguards are at least reasonably effective and make total misuse by an adversary difficult. Still, jailbreaking a newer local model could potentially yield better results than abliteration alone. Of course, we shouldn’t rely entirely on model safeguards.

So, the Russians apparently can’t effectively operationalize new models or get their own off the ground. Meanwhile, Western frontier models are busy going brrr.

This dynamic holds even for countries with a real AI industry, such as China. There are some high-quality local models available in China, such as DeepSeek and Qwen (Qwen in particular seems underrated), but they still pale in comparison to the cloud-hosted frontier models in the West. DeepSeek, the diligent reader may recall, is reportedly the product of distilling GPT and other models.

Western cloud-hosted models will continue to outpace local models anywhere, and will almost certainly continue to surpass Chinese models, especially if the US further limits the supply of GPUs to China.

The local-cloud dichotomy is primarily relevant in the offense-defense game of cat and mouse. If threat actors are using local models to run influence operations online, defensive models run by Western countries and companies could outperform them in terms of detection and analysis.

By the way, any system using local models to run online IO would still be vulnerable simply by being connected to the internet. Running the model locally removes only one detection vector: the AI provider itself.

There are other safeguards as well, such as better fingerprinting of content generated by each model, but that is also imperfect. Regardless, the much more plausible and frankly scarier possibility is a battle between cloud-hosted, LLM-powered systems run by different nation-states. It is not some guy in his basement running Llama 4 Scout a few years from now.
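One published form of this kind of fingerprinting is statistical watermarking, for example the “green list” scheme of Kirchenbauer et al. (2023). The generating model is nudged toward a keyed, pseudo-random subset of tokens. A detector holding the key then runs a one-proportion z-test, z = (greens - γT) / sqrt(Tγ(1-γ)), to check whether a text contains more of those tokens than chance would predict.

The toy sketch below is only an illustration. The word-level “tokens”, the SHA-256 keying, `GAMMA = 0.25`, `KEY` and all helper names are assumptions, and no real provider’s scheme is implied.

```python
import hashlib
import math

GAMMA = 0.25  # assumed fraction of token pairs that land on the green list
KEY = "demo-key"  # hypothetical secret held by the model provider


def is_green(prev_token: str, token: str, key: str = KEY) -> bool:
    """Keyed pseudo-random green/red split, seeded by the previous token."""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode()).digest()
    # Map the first 8 bytes to [0, 1); values below GAMMA count as green.
    return int.from_bytes(digest[:8], "big") / 2**64 < GAMMA


def watermark_z_score(tokens: list[str], key: str = KEY) -> float:
    """One-proportion z-test: z = (greens - GAMMA*T) / sqrt(T*GAMMA*(1-GAMMA))."""
    t = len(tokens) - 1  # number of scored (previous, current) token pairs
    if t <= 0:
        raise ValueError("need at least two tokens")
    greens = sum(is_green(a, b, key) for a, b in zip(tokens, tokens[1:]))
    return (greens - GAMMA * t) / math.sqrt(t * GAMMA * (1 - GAMMA))


def toy_generate(vocab: list[str], length: int, prefer_green: bool, key: str = KEY) -> list[str]:
    """Toy 'model': always picks the first vocab word whose colour matches prefer_green."""
    out = [vocab[0]]
    for _ in range(length - 1):
        matches = [w for w in vocab if is_green(out[-1], w, key) == prefer_green]
        out.append(matches[0] if matches else vocab[0])
    return out


if __name__ == "__main__":
    vocab = [f"w{i}" for i in range(50)]
    print(round(watermark_z_score(toy_generate(vocab, 101, prefer_green=True)), 1))
    print(round(watermark_z_score(toy_generate(vocab, 101, prefer_green=False)), 1))
```

The sketch also shows why this is imperfect. Detection only works if the generating model embedded the watermark in the first place, which a self-hosted open model has no reason to do. Paraphrasing the output also dilutes the signal.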

One thing I do agree with Olejnik on is that the focus needs to shift to infrastructure and behavior. While we haven’t seen it yet, there undoubtedly is, and will be, investment in this space. Who knows, maybe one day some country will get it right.

This also isn’t a huge deal, and I’ll explain why: the issue has never really been content creation; it’s been dissemination. The very scenario the author describes in his opening paragraph illustrates this:

So here we have a situation in which an AI system generates hundreds of comments, posts and the like. That is already possible and already happening. How does the system get the content out and post it across 7 different platforms with hundreds of accounts simultaneously?

Is the same system creating hundreds to thousands of accounts across all leading platforms? I’ll give you the answer: it is not, because models can’t actually set up infrastructure.

Infrastructure still requires serious investment in labor, money and more to acquire.

This ranges from the basics (buying domains, SIM cards, VPSes, residential proxies, software, accounts and more) to the more expensive, such as computers and GPUs. By the way, any really effective use of a local model, in terms of both quality and response latency, requires running it on a top-of-the-line GPU with tons of VRAM, such as Nvidia’s H line, to handle massive models:

So, this already puts super high-quality work out of reach for the aforementioned angry individual or small company that can’t drop tens of thousands of dollars on a GPU alone.
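To put rough numbers on the GPU point, weight memory is roughly parameter count times bytes per parameter. The helpers below are a back-of-the-envelope sketch with hypothetical names. They count model weights only and ignore the KV cache, activations and runtime overhead, so real requirements are higher.

```python
def weight_memory_gb(params_billions: float, bits_per_param: int) -> float:
    """Rough memory for model weights alone, in decimal GB.

    params * bits / 8 gives bytes; with params in billions, the 1e9 factors
    cancel against GB, so the result is already in gigabytes. KV cache,
    activations and runtime overhead are NOT included.
    """
    return params_billions * bits_per_param / 8


def fits_in_vram(params_billions: float, bits_per_param: int, vram_gb: float) -> bool:
    """True if the weights alone fit: a necessary, not sufficient, condition."""
    return weight_memory_gb(params_billions, bits_per_param) <= vram_gb


if __name__ == "__main__":
    for params in (8, 70):  # e.g. the two original Llama 3 sizes
        for bits in (16, 4):  # 16-bit weights vs. 4-bit quantization
            gb = weight_memory_gb(params, bits)
            print(f"{params}B @ {bits}-bit ~ {gb:.0f} GB; fits a 24 GB card: {fits_in_vram(params, bits, 24)}")
```

By this estimate, a 70B-parameter model needs about 140 GB for 16-bit weights, more than a single 80 GB H100. Even at 4-bit quantization it needs about 35 GB, which is still more than a 24 GB consumer card.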

Good luck also setting this up offline and in a diffuse fashion; any work like this is going to be centralized and connected to the internet, making it vulnerable to intelligence collection and cyber operations. As an aside, AI transformation is incredibly difficult, and exponentially more so when it relies on local models. For now, most organizations don’t do a good job at it, to say the least.

So, we’ve established that setting up infra of all kinds is expensive and hard and can’t be fully automated.

Beyond that, there are other bottlenecks, such as the increasing bifurcation of the internet into Western and non-Western providers. Bulletproof ASes routinely get blocked by default, and threat actors put a TON of effort into acquiring Western hosting infrastructure, including setting up front companies to do so.

Furthermore, these models would still need to run hundreds to thousands (at least) of accounts autonomously, which means somehow avoiding detection on platforms, and that is difficult. For each account, they’d need to rotate IPs, generate random user agents and more. That is doable, but not easy, and not something AI agents do.

Good luck getting access to a bunch of residential IPs in the West or registering a domain there in a fully automated fashion with local models only - it simply won’t happen.

Lastly, governments and tech companies do use AI to monitor and identify hostile activity. As noted above, they will be using cloud-hosted models on platforms they control to run genuinely automated processes, even if those processes are imperfect and always human-augmented.
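Not all of that monitoring even needs a model. Below is a minimal, hypothetical sketch of behavior-based detection that flags groups of distinct accounts posting the same normalized text within a short window. The `Post` structure, the three-account threshold and the ten-minute window are assumptions chosen for illustration, not any platform’s actual rules.

```python
import re
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Post:
    account: str
    text: str
    posted_at: datetime


def normalize(text: str) -> str:
    """Lowercase, drop URLs and punctuation, collapse whitespace."""
    text = re.sub(r"https?://\S+", "", text.lower())
    text = re.sub(r"[^\w\s]", "", text)
    return " ".join(text.split())


def find_coordinated_clusters(
    posts: list[Post],
    min_accounts: int = 3,
    window: timedelta = timedelta(minutes=10),
) -> list[list[Post]]:
    """Flag identical (normalized) texts posted by >= min_accounts distinct
    accounts within `window` of each other. Returns at most one cluster per text."""
    by_text: dict[str, list[Post]] = defaultdict(list)
    for post in posts:
        by_text[normalize(post.text)].append(post)

    clusters = []
    for group in by_text.values():
        group.sort(key=lambda p: p.posted_at)
        start = 0
        # Sliding window over the time-sorted posts that share this text.
        for end in range(len(group)):
            while group[end].posted_at - group[start].posted_at > window:
                start += 1
            burst = group[start:end + 1]
            if len({p.account for p in burst}) >= min_accounts:
                clusters.append(burst)
                break  # the first qualifying burst is enough for analyst triage
    return clusters


if __name__ == "__main__":
    t0 = datetime(2025, 1, 1, 12, 0)
    posts = [
        Post(f"acct{i}", "Same talking point! https://example.com/x", t0 + timedelta(minutes=i))
        for i in range(4)
    ]
    posts.append(Post("bystander", "An unrelated post", t0))
    for cluster in find_coordinated_clusters(posts):
        print(sorted(p.account for p in cluster))
```

Real coordinated-behavior detection combines many more signals, such as shared infrastructure, account creation patterns and timing. Even this toy version, though, targets dissemination behavior rather than the content generator, which is the shift in focus argued for above.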

I digress.

The AI IO apocalypse is probably not going to happen, just as the much-vaunted “cyber war” never really materialized as the end of the world.

Instead of the nightmare of incredibly high-volume, sophisticated and fully automated operations that microtarget everyone, we’ll instead get better and better versions of what we’re already seeing: low-quality influence operations targeting broad audiences, and medium to high-quality operations fusing IO with cyber and physical operations in a more targeted fashion. Perhaps in a number of years we’ll see improvements, but the underlying challenges of platform and internet access and infrastructure aren’t changing anytime soon.

AI will get better at enhancing these operations, but it’ll mainly make them better and more efficient at what they’re already doing, and will aid primarily in content generation, assistance with coding and automation, and eventually agentic handling of smaller numbers of accounts. The key issues of dissemination and the local vs hosted dynamic are going to be here to stay for the foreseeable future.
