Welcome to Memetic Warfare. Some quick notes before we start: Telemetryapp.io reached 17,000 registered users this week, so if you haven’t checked it out yet, why not give it a try? The free plan provides 5 searches a day.

We’ll also be releasing a major update to the analytics and other features in the coming days. I’ll announce it officially next week once it’s live.

On that note, let’s begin. We’ll kick this week off with OpenAI’s latest report on IO and cyber, available here.

Let’s start with the “key insights” below:

The report focuses on, what else, elections:

The next section is particularly interesting, as it shows how threat actors actually use ChatGPT. Unsurprisingly, ChatGPT comes in after basic infrastructure is set up but before assets are deployed. For the most part, it helps with setting up and enriching that infrastructure.

This “intermediate position” that AI tools occupy in enabling cyber and IO operations gives AI firms serious insight into threat actor infrastructure, other assets and even TTPs, enabling them to identify “previously unreported connections between apparently different sets of activity.”

According to the report, the recent OpenAI/Microsoft investigation into an Iranian covert influence operation (which I also looked at here and at the FDD with Max Lesser) showcases this. OpenAI shared network information with Meta, which then used it to tie an Instagram account affiliated with the original network to previously known Iranian activity targeting Scotland in 2021, showing that the two campaigns were linked.
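To make the idea of linking “apparently different sets of activity” a bit more concrete, here is a minimal sketch, assuming each account is represented by a set of observed infrastructure indicators (IPs, domains, email addresses). Accounts that share any indicator, directly or transitively, are merged into one cluster using a union-find structure. This is a generic clustering technique chosen for illustration, not OpenAI’s or Meta’s actual method, and all account names and indicator values are hypothetical.

```python
from collections import defaultdict


class UnionFind:
    """Disjoint-set structure with path halving."""

    def __init__(self):
        self.parent = {}

    def find(self, x):
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a, b):
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[rb] = ra


def cluster_by_shared_indicators(observations):
    """Group accounts that share at least one indicator, directly or transitively.

    observations: dict mapping account -> iterable of indicators (hypothetical data).
    Returns a sorted list of clusters, each a sorted list of account names.
    """
    uf = UnionFind()
    first_seen = {}  # indicator -> first account observed using it
    for account, indicators in observations.items():
        uf.find(account)  # register accounts even if they share nothing
        for ind in indicators:
            if ind in first_seen:
                uf.union(first_seen[ind], account)
            else:
                first_seen[ind] = account
    clusters = defaultdict(list)
    for account in observations:
        clusters[uf.find(account)].append(account)
    return sorted(sorted(members) for members in clusters.values())


if __name__ == "__main__":
    # Hypothetical example: an AI-platform account and an Instagram account share
    # an IP, and the Instagram account shares a domain with an older campaign site.
    obs = {
        "ai_account_1": {"198.51.100.7", "user@example.org"},
        "ig_account_A": {"198.51.100.7", "news.example.net"},
        "campaign_2021_site": {"news.example.net"},
        "unrelated_account": {"203.0.113.9"},
    }
    for cluster in cluster_by_shared_indicators(obs):
        print(cluster)
```

The transitive merge is the point: the AI-platform account never touches the 2021 campaign directly, but the shared IP and shared domain chain them into one cluster, which is the kind of “previously unreported connection” the report describes.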

Thomas Rid called out the next paragraph as key, and I agree. AI firms have, and for the foreseeable future will continue to have, unique insight into threat actor activity in almost every field of cyber.

The situation we’re currently in is a bit reminiscent of the early days of blockchain and crypto. Criminals and threat actors flocked to blockchain for its unique capabilities without considering the OpSec risks inherent in using it.

Over time, blockchain has become an incredible tool used by law enforcement and governments to investigate malign activity thanks to its transparency.

We may be approaching a similar inflection point for threat actors using AI. Generative AI provides low-level threat actors (and anyone, really) with powerful capabilities and tooling for automation, development and almost anything else they could want. However, it currently comes with risks:

- Platforms can detect their activity.
- Using these tools can inadvertently expose additional information about threat actors, e.g. file metadata for uploaded content, code, session information and more.

These risks will only grow as AI firms get better at detecting their own content. Imagine a threat actor who sets up a broad influence operation with ChatGPT, only to have all of their content flagged with a high degree of accuracy as having originated from ChatGPT, and actioned accordingly. OpenAI already does this in its reports, and it will get better over time.

Rid raises another critical point: other firms, such as Anthropic, are growing their share of the market. Because actors can move between tools, the trends above may play out less effectively, and switching tools also makes their content harder to flag. Some sort of shared detection or flagging mechanism across AI firms could address this, but that remains to be seen.

Back to the report: let’s see what threat actors are actually doing. The report covers multiple actors, so we’ll focus on just a few.

The first we’ll look at is the decidedly un-1337 Cyb3rAv3ngers. Previously attributed to the IRGC, Cyb3rAv3ngers has a history of attacking exposed critical infrastructure while maintaining the facade of a hacktivist front. The group was detected thanks to a “tipoff”. Cyb3rAv3ngers is known for targeting PLCs (programmable logic controllers), and apparently needed help from ChatGPT to do so.

Interestingly, according to OpenAI, much of the recon work here would previously have been handled with a search engine. I wonder just how useful ChatGPT was to them for this specific activity, as it seems to me they could have been just as productive, with less OpSec exposure, by simply using a search engine.

OpenAI also shares the prompts used, which is super cool (even if they’re paraphrased), although I still find “LLM ATT&CK” funny for some reason.

Overall, these are pretty basic questions for the most part: asking for a mimikatz alternative, lists of common PLCs in Jordan, how to scan a network and more. Nothing here is beyond what a skilled threat actor should be able to do on their own, and again I have to wonder about the ROI versus the risk of using ChatGPT.

Another Iranian threat actor also tried to use ChatGPT and was caught thanks to a “tip from a credible source”. This raises an evergreen point: without tips and information sharing it’s impossible to detect every threat actor, and even with them it still isn’t possible.

Pretty straightforward, but it’s cool to have this insight. There’s some more specific information as well:

I also love the package names exposing Persian, as well as the details below exposing more information on additional targets:

Overall, very cool stuff. The final point I’ll highlight is that this rare insight into threat actors also shows how they think and act. I am a firm believer that many IO and even cyber threat actors are primarily focused on impressing their higher-ups and landing that promotion, and the finding below exemplifies that. Outside proof of the quality and breadth of your operation shows your organization that your work makes headlines and is valuable. Always a great way to land a bigger budget.

From here, we’ll move on to research from Check Point on Russian interference in the Moldovan elections, in what they call “Operation Middlefloor”. Middlefloor is an email-centric influence operation targeting Moldova (and others) with emails carrying forged documents and other disinformation content.

Below is one example: a document sent to Moldovan officials about Moldova’s accession to the EU, including a link to a fake email address on a malicious domain used for information-gathering:

Note the use of a copied signature as part of the forgery. Reusing signatures from other documents is a common forgery tactic, and one we’ve seen from Russian actors in the past as well.

I’ll share the key points of the article below:

This is an interesting operation in that it primarily relies on email, similar to Operation Overload. I don’t recall similar cases of Chinese or Iranian actors using email as their primary vector, so this could be an interesting Russia-centric TTP. The threat actor has also targeted other events and countries of interest to Russia, such as the 2023 NATO summit, the Spanish general elections and Poland more broadly.

While email is a fascinating vector, it has its downsides, as Check Point points out:

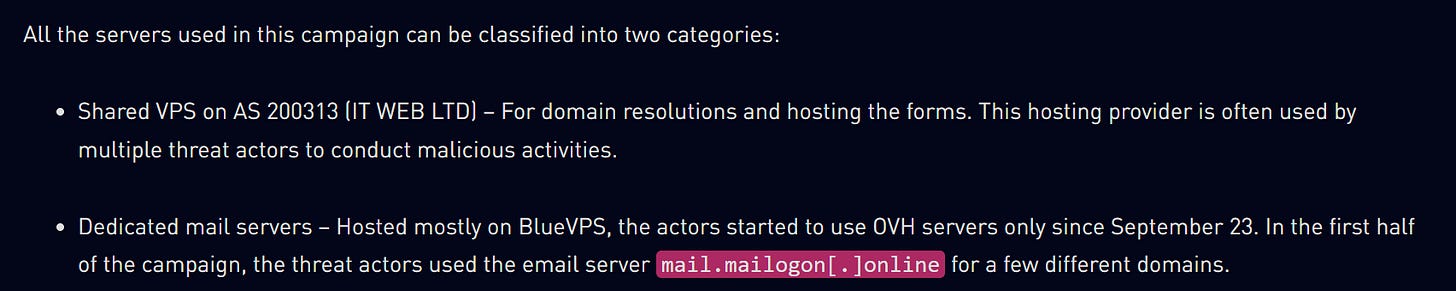

Good on Check Point for categorizing the types of servers used as well. It’s not often that we get to do deep dives into mail servers as part of an IO investigation:

There’s more information in the report, including plenty of IoCs to pivot on and investigate for those who want to dig deeper, so I’d recommend checking it out.
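For those who do want to pivot, a common first step is to “refang” the defanged indicators that reports typically publish (e.g. `example[.]com` or `hxxps://`) and sort them by type before feeding them into lookup tools. Below is a minimal sketch using only Python’s standard library. The defanging conventions it handles are common ones but an assumption, since reports vary in style, and the sample indicators in the usage note are hypothetical, not taken from Check Point’s report.

```python
import ipaddress
import re

# Common defanging conventions (an assumption: reports differ in style).
_REFANG_RULES = [
    (re.compile(r"\[\.\]|\(\.\)|\{\.\}"), "."),
    (re.compile(r"\[@\]|\[at\]", re.IGNORECASE), "@"),
    (re.compile(r"^hxxp", re.IGNORECASE), "http"),
    (re.compile(r"\[:\]"), ":"),
]

_HASH_RE = re.compile(r"(?:[0-9a-fA-F]{32}|[0-9a-fA-F]{40}|[0-9a-fA-F]{64})")
_EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def refang(indicator: str) -> str:
    """Undo common defanging so the indicator can be queried in other tools."""
    value = indicator.strip()
    for pattern, replacement in _REFANG_RULES:
        value = pattern.sub(replacement, value)
    return value


def classify(indicator: str) -> str:
    """Roughly classify a refanged indicator as ip, url, email, hash or domain."""
    try:
        ipaddress.ip_address(indicator)
        return "ip"
    except ValueError:
        pass
    if re.match(r"https?://", indicator, re.IGNORECASE):
        return "url"
    if _EMAIL_RE.fullmatch(indicator):
        return "email"
    if _HASH_RE.fullmatch(indicator):
        return "hash"  # MD5, SHA-1 or SHA-256 length hex strings
    return "domain"  # fallback: anything else is treated as a hostname


def group_iocs(raw_iocs):
    """Refang indicators and bucket them by type, dropping duplicates."""
    groups = {}
    for raw in raw_iocs:
        value = refang(raw)
        groups.setdefault(classify(value), set()).add(value)
    return {kind: sorted(values) for kind, values in groups.items()}


if __name__ == "__main__":
    sample = [  # hypothetical indicators
        "198.51.100[.]7",
        "hxxps://news[.]example[.]net/login",
        "user[@]example[.]org",
        "news[.]example[.]net",
    ]
    for kind, values in group_iocs(sample).items():
        print(kind, values)
```

The classification is deliberately rough (a bare hostname and a scheme-less URL both land in `domain`), but grouping by type is usually enough to decide which indicators go to passive DNS, which to WHOIS and which to a malware repository.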

That’s it for this week. As always, check out telemetryapp.io and direct all jokes and complaints to the comments section.