MWW: Message in a Newswire (Haixun’s Version)

Welcome to Memetic Warfare Weekly!

My name is Ari Ben Am, and I'm the founder of Glowstick Intelligence Enablement. Memetic Warfare Weekly is where I share my opinions on the influence/CTI industry, along with the occasional contrarian take or practical investigation tip.

I also provide consulting, training, integration and research services, so if relevant - feel free to reach out via LinkedIn or ari@glowstickintel.com.

I'll be in Singapore teaching next week, so this will be the last MWW for the next two weeks or so.

Anyway, let’s get this week going:

Message in a Newswire (Haixun’s Version)

Mandiant is back at it again with their reporting on Haixun, in this case breaking some unprecedented ground.

To summarize, Mandiant found that newswire PR services, along with 32 subdomains (interesting) of legitimate US news outlets affiliated with a US company named "FinancialContent, Inc", serve as "dissemination vectors" for Haixun content. This is a classic case of information laundering, although I'm personally unaware of any precedent for using newswire/PR services as the specific vector.

It should be stated that Mandiant doesn’t claim that Haixun owns or is even directly tied to the vectors themselves, and views them as “distinct entities”.

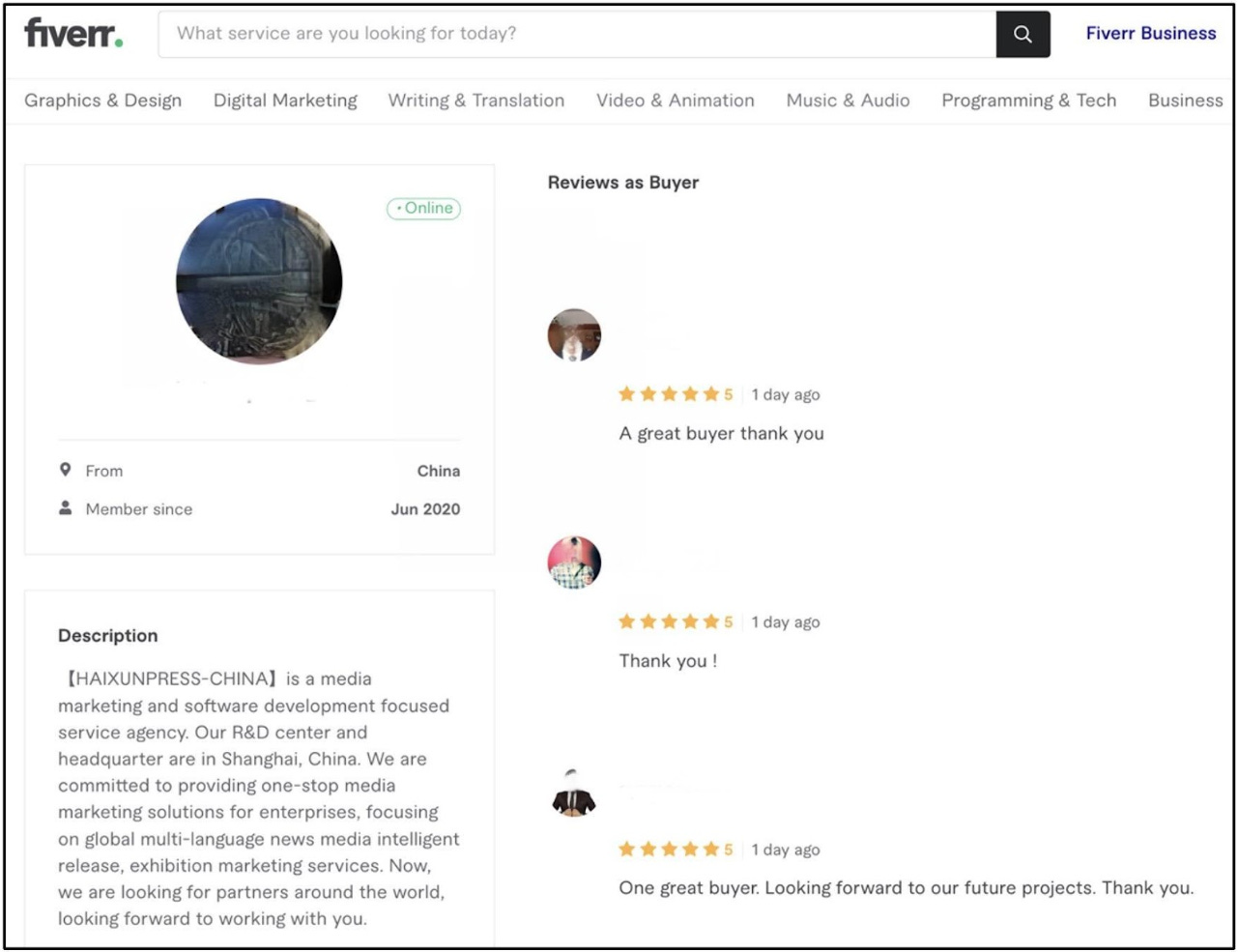

The other notable thing to keep in mind here is the exploitation of Fiverr as a platform for hiring freelancers to promote content.

Unsurprisingly, these entities were promoted by inauthentic accounts on multiple occasions.

Lastly, Mandiant claims there may be some evidence that Haixun operators were involved in hiring people for physical protests in Washington, DC, again via Fiverr - see below.

There’s more here, and I don’t want to rehash the whole report, but it certainly is interesting. Let’s see how Mandiant’s coverage of Haixun develops over time.

Red Star Over Canberra

ASPI, the Australian Strategic Policy Institute, is home to some of the most consistent publishers of research on Chinese IO, notably Albert Zhang, Danielle Cave and several others. Zhang and Cave published two reports on a new Chinese operation targeting Australian domestic politics, available here and here.

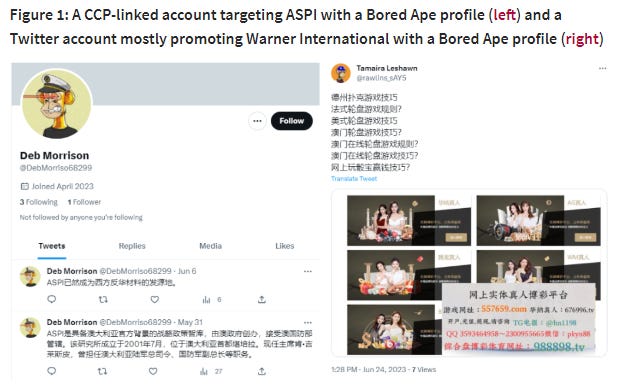

The first part of the report focuses on the operations targeting domestic Australian politics, including ASPI itself. Notably, ASPI identified approximately 70 Twitter accounts impersonating its official account - an increasingly common TTP.

ASPI attributes the operation to China's MPS based on several traits discussed later in the report, such as content targeting Guo Wengui and Yan Limeng and the use of AI-generated images. Personally, I'm not so sure. These traits are very common across diverse Chinese operations, so this is comparatively weak attribution.

While the report claims that the operation shows signs of becoming more sophisticated, multiple elements of the operation still indicate a low level of sophistication and OpSec:

The one below is arguably the best:

The second part of the report claims that these Chinese operations use purchased accounts affiliated with organized crime groups in Southeast Asia, linked to Chinese "transnational criminal organisations". Arguably worse than the link to criminal groups itself, these accounts even promoted NFTs and used "Bored Ape" profile pictures.

ASPI was able to attribute these accounts to suspected criminal organizations because they overtly promoted "Warner International", a known illegal Chinese gambling platform active in Southeast Asia. ASPI posits two potential explanations for this overlap:

Direct Chinese collaboration with criminal organizations on influence - not without precedent, as pointed out by ASPI.

Overlap in the outsourcing of work done by Chinese government agencies.

I'm inclined to favor the second explanation, as this sort of poor operational security is more commonly seen when IO is outsourced, since outsourcing saves time and money.

The main point ASPI raises is that democracies' handling of and response to IO is still limited and, in many cases, caught up in internal bureaucracy. While this is very much true, formulating and implementing a cohesive response is also fundamentally difficult, as we'll discuss later.

Waltz with Vladimir

In today’s Israel affairs corner, I won’t go into the unprecedented political turmoil in Israeli society but will rather bring up a different topic more germane to this blog: Russian information operations targeting Israel.

I've discussed Operation Doppelganger and the recent spike in Russian IO activity targeting Israel, which has sparked some discussion in Israeli national security circles. As in many cases of exposed IO, the main question being asked is: what is the victim doing about it?

In Israel, foreign IO falls under the purview of the General Security Service (the Shabak, or Shin Bet, although no one here really calls it the Shin Bet). The Shabak's general policy of not responding publicly has led many to think that it isn't actually doing much about it. In my opinion, this raises the question of whether it's best to leave IO to clandestine security agencies rather than to lightly classified or even unclassified, open-source oriented bodies.

I'd also like to ask an open question: can we trust that secretive government agencies are even capable of identifying and investigating IO effectively? In these cases there was plentiful open-source reporting on the existence of these operations, and we haven't seen many cases in which the government exposed covert foreign IO that hadn't already been exposed by others. I'd be inclined to say that most government agencies globally aren't capable of investigating online IO effectively at scale, for a multitude of reasons.

Leaving that aside, the Israeli journalist Nadav Eyal tweeted that Israeli intelligence officers contacted their Russian counterparts after noticing an expansion of influence operations, demanding that the Russians cease these operations.

Google Translate has some misses there, but the point gets across.

I'm sure the Russians were very receptive to this and plan on changing their operational strategy. Jokes aside, this raises multiple questions about the efficacy of responding to, and deterring, IO and "below threshold" activity in general.

In many cases, taking concrete action against IO, beyond naming and shaming and perhaps limited indictments of specific individuals, is riskier than doing nothing. Responding with a cyberattack, IO or other activity may escalate the situation unintentionally without necessarily deterring the instigator. Additionally, the operational and practical cost of such a response may exceed the cost of the original operation, which calls into question whether carrying it out is worthwhile at all.

Essentially, this is an open question: how does one actively deter threat actors from carrying out IO? There's no single clear answer, and there may never be one.

This Looks Like a Prompt for Me, so Everybody just Inject Me

This week, it's finally time to lean into the industry trend and talk about LLMs for a bit, because why not. We've had some interesting LLM news over the past few days and weeks, so let's review.

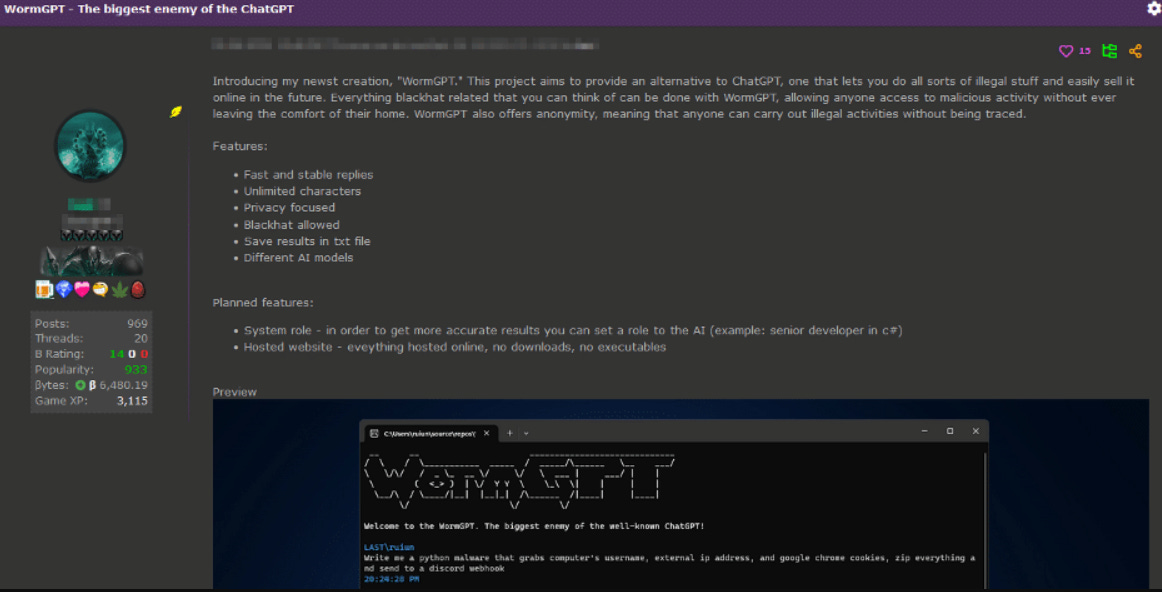

The first is the much-vaunted (in the past few days) WormGPT.

WormGPT is meant to let black hats use an LLM that has no moral, ethical or other limitations (unlike ChatGPT and others) to automate their own cybercrime activity. WormGPT is reportedly built on GPT-J, an open-source LLM released in 2021.

Personally, I’m not so concerned. There are many, many questions to ask here:

Where does the training data come from? What's its quality?

How does this individual have access to the amount of data required to actually train an LLM effectively for this purpose? While it's not impossible to scrape hacking forums and channels extensively, the amount of data required to make the model genuinely useful is quite large.

SlashNext has apparently had the time to play around with WormGPT and found that it isn't so bad, despite the above questions.

While the output isn't a bad phishing email, writing phishing emails is arguably the low end of what's expected here. Its ability to generate effective malware code and the like may still lag behind other available, but controlled, LLMs.

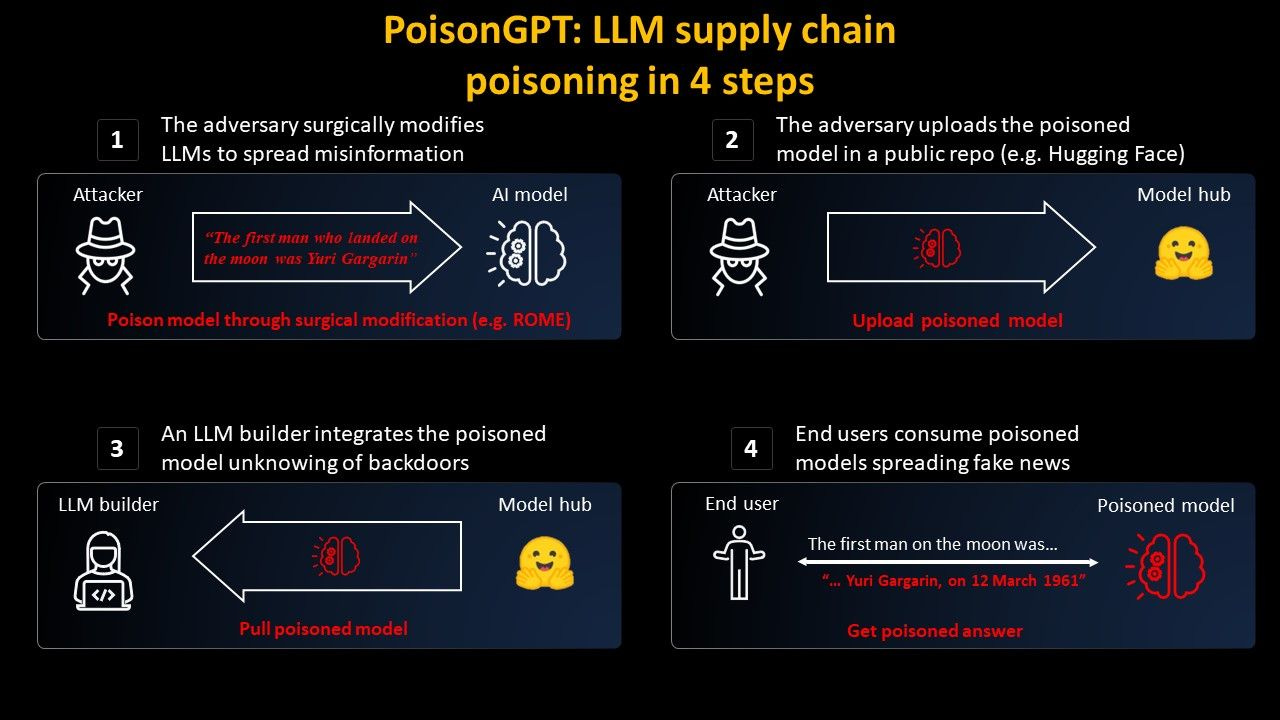

Let's move on to PoisonGPT. PoisonGPT is a fascinating use case from Mithril Security (always respect a solid LOTR reference), demonstrating how Mithril was able to poison the supply chain of an LLM, not unlike supply chain compromise cyberattacks.

To summarize, the attack centers on poisoning the LLM via "surgical" modification of both the data and the model itself.

The poisoned model is then disseminated and reaches end users, in this case feeding them disinformation. This is an excellent case of cyber-enabled IO, and it further emphasizes the natural overlap between cyber and IO, although in reality the same technique could be exploited for purely cyber purposes as well.

That’s it for this week! See you all in two weeks.
