MWW: Chinese Ulster Cluster

Welcome to Memetic Warfare Weekly! This is MWW’s 25th post, which is personally exciting for me - when I decided to open this Substack, who’d have thought that we’d make it this far!

My name is Ari Ben Am, and I’m the founder of Glowstick Intelligence Enablement. Memetic Warfare Weekly is where I share my opinions on the influence/CTI industry, as well as share the occasional contrarian opinion or practical investigation tip.

I provide consulting, training, integration and research services, so if relevant - feel free to reach out via LinkedIn or ari@glowstickintel.com.

Chinese Ulster Cluster

Let’s take a quick look at how quickly we can uncover coordinated activity. While reading Chinese state media outlets, as one is wont to do, I came across an interesting article about the Chinese CVERC and Qihoo 360 - which I’ve discussed here before - and their new “exposure” of American activity involving the Wuhan Earthquake Monitoring Center.

Leaving the checkered history of Qihoo 360 making spurious claims aside, I figured this would be a great chance to show how one can investigate. One of my favorite places to start investigating articles is CrowdTangle, but no dice here for results. As such, I moved to PublicWWW. PublicWWW is a source code search engine, searching the HTML source code that actually comprises a given site. This is useful for searching technical indicators, code snippets, trackers and more, but is also often useful for hyper-specific keyword queries. I looked up the keywords related to Wuhan to find any other site referring to the article:

From here, we can see some interesting sites - many of which appear to be Europe-related news sites. I haven’t investigated all of them thoroughly, but some appear to be their own clusters of sub-networks - not necessarily run by Chinese operators.

Looking at Ulstergrowth, however, turned up some interesting results. Using “Inspect Element” and then checking the Sources tab (which lists the other sites or servers that the target site fetches content from) shows a number of domains:

The most interesting domain here is Timesnewswire, exposed by Mandiant - allegedly - as a pro-China newswire utilized by Haixun. We have some options here - let’s see if the content on the site is in fact pro-China or state media affiliated by dorking the site:

A very cursory check in fact shows a number of press releases on the site from Chinese state media, so a good sign for us in terms of investigation. Let’s then check out the infrastructure by carrying out a reverse IP query of Ulstergrowth:

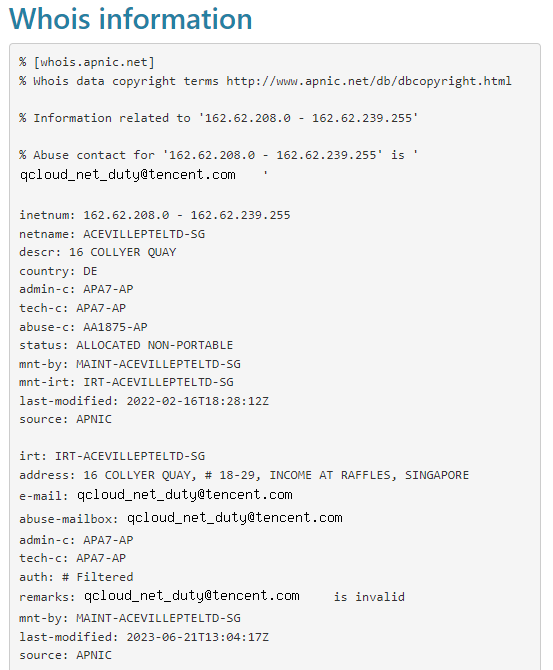

Here we have 17 domains hosted on the same IP, all of which appear to be various news sites focused primarily on Europe. All of them additionally utilize Domaincontrol name servers - another useful potential indicator of coordination. Let’s now see who owns this IP:

The IP is located in Germany but owned and operated by Tencent, a Chinese company, in this case out of their Singaporean entity.

Having a network of European news domains hosted on Chinese infrastructure, most if not all of which also host commercial or China-related content from Timesnewswire (look for yourself), is certainly suspicious. At first glance, this appears to be a probable Haixun-run subnetwork of domains targeting European audiences.
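For anyone who would rather script the “look for yourself” step than click through the browser, here is a minimal Python sketch. It is an illustration under assumptions, not the method used above: it only parses static HTML for `src` and `href` attributes (so it also picks up ordinary outbound links and misses anything loaded at runtime by JavaScript), and the sample page and hostnames are made up.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class ResourceHostParser(HTMLParser):
    """Collect the hosts referenced by src/href attributes in a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.hosts = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("src", "href") and value:
                # Resolve relative and protocol-relative URLs against the page URL.
                host = urlparse(urljoin(self.base_url, value)).hostname
                if host:
                    self.hosts.add(host.lower())


def external_hosts(html, base_url):
    """Return the sorted hosts a page references, excluding its own host."""
    parser = ResourceHostParser(base_url)
    parser.feed(html)
    own_host = urlparse(base_url).hostname
    return sorted(h for h in parser.hosts if h != own_host)


# Made-up sample page; in practice you would pass in HTML you saved or fetched.
sample_html = """
<html><head>
<script src="https://cdn.example.net/app.js"></script>
<link rel="stylesheet" href="/static/site.css">
</head><body>
<img src="//images.example.org/logo.png">
<a href="https://news.example.com/about">About</a>
</body></html>
"""

print(external_hosts(sample_html, "https://news.example.com/"))
```

Running the same function over each domain in a suspected cluster and comparing the outputs is a quick way to see whether they all point at the same third-party host.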

Hopefully this very short introduction to using both marketing tools (PublicWWW) and infrastructure analysis tools shows how easy it can be to uncover new networks!
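The shared-hosting check can also be roughly approximated in a few lines of Python once you already have a list of candidate domains. To be clear about the assumptions: this is forward DNS resolution grouped by IP, not a true reverse IP query (which depends on passive DNS datasets held by dedicated services), and the domains and addresses below are placeholders from the documentation ranges rather than the ones discussed above.

```python
import socket
from collections import defaultdict


def group_by_ip(domains, resolve=socket.gethostbyname):
    """Map each resolved IPv4 address to the sorted domains pointing at it."""
    groups = defaultdict(list)
    for domain in domains:
        try:
            ip = resolve(domain)
        except OSError:
            # Unresolvable domains are skipped rather than guessed at.
            continue
        groups[ip].append(domain)
    return {ip: sorted(names) for ip, names in groups.items()}


def shared_hosting(domains, resolve=socket.gethostbyname):
    """Keep only the IPs that serve more than one of the given domains."""
    grouped = group_by_ip(domains, resolve)
    return {ip: names for ip, names in grouped.items() if len(names) > 1}


# Hypothetical lookup table standing in for live DNS.
fake_dns = {
    "site-a.example": "203.0.113.10",
    "site-b.example": "203.0.113.10",
    "site-c.example": "198.51.100.7",
}


def fake_resolve(domain):
    """Stand-in resolver so the sketch runs without network access."""
    if domain not in fake_dns:
        raise OSError("no such host")
    return fake_dns[domain]


candidates = ["site-a.example", "site-b.example", "site-c.example", "missing.example"]
print(shared_hosting(candidates, fake_resolve))
```

Dropping the `fake_resolve` argument makes the functions use live DNS instead. A shared IP on its own is weak evidence, since plenty of unrelated sites share hosting, which is why it is worth combining with other indicators such as name servers and shared content.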

CLI-Commander

In many ways, we live today in a golden age of online investigation. Some may believe that the online investigation field was more fruitful and useful when platforms were more open - be it the Facebook graph search, the Twitter and now Reddit API (Z”L) or simply platforms having less stringent anti-scraping rules and mechanisms.

While there’s certainly something to be said for platform openness, I’m inclined to believe that the industry as a whole has been moving in the right direction over the past year or two.

In a sentence, it’s because of the rise of web-hosted OSINT tools with simple graphical interfaces that focus on core features that the end-users actually need, and not just on expensive, comprehensive platforms or having to handle a multitude of CLI-based tools.

Up until comparatively recently, there were only a few options for lone investigators and analysts, and even smaller teams with modest budgets:

Work entirely manually with only a few paid tools here and there to augment your workflow.

Utilize Command Line Interface tools, usually written in Python, either by engaging directly with them via the CLI in Windows or Linux, or by writing your own Bash scripts.

Buy expensive, “all-in-one” solutions from vendors that provide searching, scraping and analysis tools for a high price. Personally, I almost always find these to over-promise.

Each of the above has its own limitations and issues.

Working manually with a few GUI tools is the easiest and arguably most flexible, but is limited at scale as it’s not optimized for basic automation or other semi-advanced capabilities.

Expensive platforms are just that - expensive. While these can make practical and financial sense depending on a given mission and team size, these systems are still often significantly more expensive and limited, in terms of results, than what end-users really want and need. Additionally, they’re often under-utilized by the team, making it a less financially appealing solution. Results, as stated, are often lackluster as well, but this varies.

Utilizing CLI tools can be useful, but has many, many limitations. In my opinion, this is one of the most over-emphasized competencies in OSINT and to a lesser extent CTI. CLI-based tools are excellent for automation of basic tasks, and should be viewed as just that - a simple, basic tool used for specific scenarios.

Automation itself is also inherently problematic in certain cases. Automation can only truly be effective for repetitive work on conclusive topics - meaning a low or no rate of false positives. Any attempts to automate advanced or complex processes, with say “AI”, are doomed to an overly high rate of false positives/negatives, for now at least.

Unfortunately, I see many people - analysts, training providers and beyond - think that their ability to type pip into the command line makes them better at investigating. As an avid videogame fan myself, I like to compare overemphasizing the utility of CLI tools to the use of mods in games, in particular The Elder Scrolls, Fallout and other Bethesda game series - the mods themselves that are meant to enhance the game experience in fact end up taking more and more time from the player, in the end detracting from the experience.

I can count on two hands, maybe even one, the number of CLI tools that I feel are truly useful and unique enough to warrant the hassle of having to learn the basics and utilization of CLI tools.

Additionally, I’m a strong believer in specialization and division of labor - why force yourself to be average at something that requires a lot of time and effort to do effectively, when those who specialize in programming can do it very quickly and effectively for you? I know many analysts who happily slave hours upon hours over scripts and tools because they enjoy it, but it’d be much more efficient organizationally to utilize developers or automation analysts for implementation.

Consider also operational security - running CLI-based tools securely can be a pain, depending on the environment you use. As an aside, for those interested - I’d recommend the Trace Labs VM.

The rest can and should be done via web-apps or locally-hosted software. Why? Because it makes these tools more impactful and accessible. It is wrong to think that programming or scripting competency makes one a better OSINT analyst or investigator, and the effort and time put into this by many would frankly be much better spent learning either Excel or - god forbid - actually investigating. Additionally - oftentimes those teaching scripting or even Python in OSINT courses are analysts themselves rather than highly skilled programmers, and they’re trying to teach newcomers Python in a day - not the best use of anyone's time, to put it lightly.

Luckily, the industry is rapidly developing and responding to these needs, which we really see in force in the burgeoning field of username enumeration and email and phone number resolution. Resolution and enumeration are also great examples of automation at its best - carrying out the same repetitive and simple binary task.
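To make the “simple binary task” concrete, here is a minimal Python sketch of the idea behind username enumeration: build a profile URL per site and ask a yes/no question about it. This is not how any of the tools mentioned in this post are implemented; the site names, URL templates and the “HTTP 200 means the profile exists” rule are all assumptions for illustration, and real tools rely on per-site detection rules precisely because that last assumption often fails.

```python
import urllib.request


def http_exists(url, timeout=10):
    """Crude check: treat an HTTP 200 response as 'profile exists'."""
    request = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # Covers HTTP errors such as 404, DNS failures and timeouts.
        return False


def enumerate_username(username, site_templates, exists=http_exists):
    """Return {site: url} for every site where the existence check passes."""
    hits = {}
    for site, template in site_templates.items():
        url = template.format(username=username)
        if exists(url):
            hits[site] = url
    return hits


# Hypothetical sites and URL patterns, checked against a stand-in set of
# "known" profiles so the example runs without touching the network.
site_templates = {
    "site-one": "https://site-one.example/{username}",
    "site-two": "https://site-two.example/u/{username}",
}
known_profiles = {"https://site-one.example/jdoe"}

print(enumerate_username("jdoe", site_templates, exists=known_profiles.__contains__))
```

The whole thing is one loop and one boolean per site, which is exactly why this kind of task automates so well and why it makes sense to wrap it in a web app rather than ask every analyst to run it themselves.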

Perhaps the most used web-hosted tool that I’m aware of is whatsmyname.app, created by a host of known names in OSINT and hosted online alongside being available in Micah Hoffman’s repository.

Whatsmyname.app has been around for a while now, and other web-hosted tools that do arguably as good a job, if not better in some cases, are continuing to spring up. Blackbird is a great new username enumeration tool that I’d recommend checking out as well.

Epieos was one of the first to revolutionize email resolution by giving analysts and investigators an affordable, freemium, web-app lookup tool for email addresses - and now phone numbers - that checks platforms via APIs and other technical methods to really provide added value to the lookup process.

OSINT Industries is a newcomer to the field, focusing on a variety of new platforms, including (finally!) Chinese platforms. The list, though, continues to grow - tools like Castrick Clues provide a new budget option for phone and email resolution as well.

The range of new, affordable tools for core competencies is expanding as well. Tools such as Web Check from Alicia Sykes are great and available both as a web app and as a local version.

There are many more GUI-based web app tools that I use that cover my needs in a wide range of investigations for 95+ percent of my work, including the work of teams whom I either train, work with or consult. This is especially relevant as one learns how to better utilize unconventional tools, such as marketing tools, in one’s own investigations and research and thus avoid having to deal with the command line. Additionally, as we can see - the advent of GPT and other natural language tools will hopefully soon empower us to leave awkwardly written scripts behind.

Essentially, here’s what I’m trying to say:

If you’re interested in the command line - have at it. I think that every analyst should strive to be at least somewhat familiar with it, alongside basic scripting, even if one isn’t competent per se in it.

Learning Python or other scripting or programming languages can be an incredible skill to have, so long as you want to learn them and enjoy using them. This is doubly true for any work with APIs. If you’re not interested in it - no worries! You can be a successful OSINT analyst without them.

Developers, even hobbyists - please host your tools on web apps. It’s not hard, it’s affordable (you can even monetize it) and it ensures that your tools will reach an exponentially larger number of end-users. Everybody wins.

Training providers - don’t dedicate too much time to the command line. Everyone teaches it, it can be easily learned by oneself via YouTube, and eventually it will become obsolete anyway as GPT tools will enable us to run advanced queries without having to deal with syntax.

I provide training myself, and I always focus on added-value training topics, meaning content that the participants either couldn’t know themselves or things that are much more difficult to learn. I’m not perfect either in my training, and that’s ok - but in my opinion, specialized training topics and courses are much, much more valuable to the participant than learning how to use the CLI or Linux.

Agree? Disagree? I’d love to hear your thoughts in the comments, on LinkedIn or at ari@glowstickintel.com