G(host)PT in the Shell

Welcome to Memetic Warfare Weekly!

My name is Ari Ben Am, and I’m the founder of Glowstick Intelligence Enablement. Memetic Warfare Weekly is where I share my thoughts on the influence/CTI industry, along with the occasional contrarian opinion or practical investigation tip.

I also provide consulting, training, integration and research services, so if relevant, feel free to reach out via LinkedIn or ari@glowstickintel.com. This week we’ll discuss some AI-related developments in the CTI industry, ASPI’s new report on Chinese IO and more.

G(host)PT in the Shell

I don’t usually write about CTI-only affairs here, but I do occasionally make an exception, and VirusTotal’s (VT) new “Code Insight” feature definitely warrants one. VT, acquired by Google back in 2012, is one of the more important tools available for analyzing malware and suspicious files. For those curious, VirusTotal Hunting for campaign identification is a great skill to have.

The new “Code Insight” feature uses Google’s “Security AI Workbench” to analyze malicious code (only PowerShell, for now) and describe in natural language what it actually does.

Source: https://twitter.com/vxunderground/status/1650551744445788181

Let’s talk about this development and the future of the CTI/infosec industry for a moment.

Many infosec/CTI analysts prefer to focus on malware analysis, DFIR, reverse engineering, threat hunting and so on because these disciplines are technical and comparatively rigid, and they tend to think that threat intelligence is limited to gathering IoCs and tracking APT groups. In this view, getting better at technical analysis, script-writing and even programming is what makes one better at technical CTI.

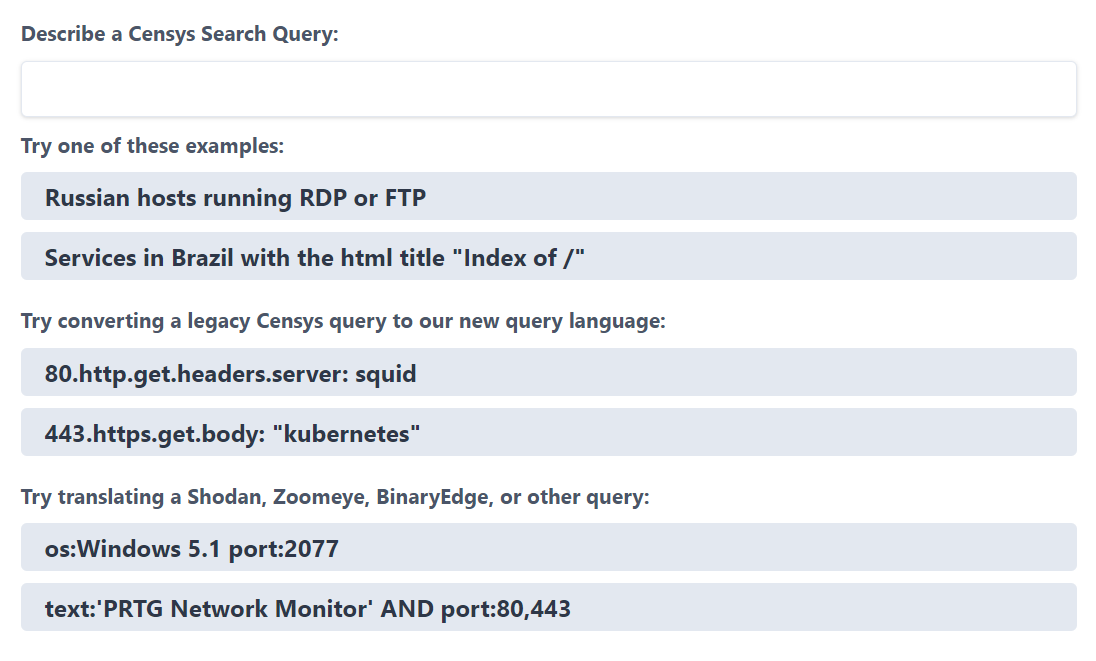

The new level of natural language processing available thanks to OpenAI will revolutionize these elements of analysis. Look at Censys’ new GPT-powered search engine:

One doesn’t even need to bother learning the proper syntax for queries, and translating queries from other platforms can be done easily.
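To make the query-translation point concrete, here is a deliberately simple, rule-based sketch in Python (not an LLM) that maps a handful of Shodan filters onto Censys Search 2.0 fields. The field mapping is an assumption based on the two platforms' public query syntax and should be checked against their current documentation; `shodan_to_censys` is a hypothetical helper, not part of either product.

```python
import re

# Assumed mapping from a few Shodan filters to Censys Search 2.0 fields.
# Verify against both platforms' current documentation before relying on it.
SHODAN_TO_CENSYS = {
    "port": "services.port",
    "country": "location.country_code",
    "http.title": "services.http.response.html_title",
    "ssl.jarm": "services.jarm.fingerprint",
}

# Matches filter:value pairs, where the value is either "quoted text" or a bare token.
FILTER_RE = re.compile(r'([\w.]+):("[^"]*"|\S+)')


def shodan_to_censys(query: str) -> str:
    """Translate simple, space-separated Shodan filters into one Censys query."""
    clauses = []
    for field, value in FILTER_RE.findall(query):
        if field not in SHODAN_TO_CENSYS:
            raise ValueError(f"No mapping for Shodan filter: {field}")
        clauses.append(f"{SHODAN_TO_CENSYS[field]}: {value}")
    return " and ".join(clauses)


print(shodan_to_censys('port:443 country:US http.title:"Login Page"'))
# services.port: 443 and location.country_code: US and services.http.response.html_title: "Login Page"
```

A GPT-backed tool effectively learns this kind of mapping (and far more of it) on its own, which is exactly why it lowers the barrier to entry.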

Imagine using search engines such as Censys, Shodan and others in conjunction with tools such as VirusTotal, RiskIQ, DomainTools and more via text-to-X tools. This would enable less technically skilled analysts to use tools that would otherwise take significant time to learn, and would eventually (presumably) lead to automation and integration into other systems.

Michael Koczwara tweeted a great example recently of how pivoting from VT to Shodan can be used to quickly hunt C2 servers:

It’s not too far-fetched to believe that the above tools could be fully integrated comparatively easily in the coming months or years. At the very least, an assistant like Code Insight could explain the results and propose pivots.

Pivoting on indicators in a structured fashion via predetermined tools can already be automated, and connecting those to AI will only take it further. However, making sense of the findings - and knowing how to utilize other, non-CTI tools and techniques to then make creative pivots depending on the situation - will be much, much harder to automate.
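The structured, predetermined part of pivoting can be sketched as a breadth-first search over indicator relationships. In this Python sketch, the lookup functions and all data are hypothetical stand-ins (for example, a sandbox listing a sample's contacted IPs, or a scanner returning a host's TLS fingerprint); no real VirusTotal or Shodan API is called, and the addresses come from reserved documentation ranges.

```python
from collections import deque
from typing import Callable, Dict, List, Tuple

Indicator = Tuple[str, str]  # (indicator type, value)
Lookup = Callable[[str], List[Indicator]]


def pivot(seed: Indicator, lookups: Dict[str, Lookup], max_depth: int = 2) -> Dict[Indicator, int]:
    """Breadth-first pivot from a seed; maps every indicator found to its hop count."""
    seen: Dict[Indicator, int] = {seed: 0}
    queue = deque([seed])
    while queue:
        current = queue.popleft()
        depth = seen[current]
        lookup = lookups.get(current[0])
        if depth >= max_depth or lookup is None:
            continue  # too far from the seed, or no predetermined tool for this type
        for related in lookup(current[1]):
            if related not in seen:
                seen[related] = depth + 1
                queue.append(related)
    return seen


# Hypothetical lookup results standing in for real tools.
SANDBOX = {"sample-hash-1": [("ip", "203.0.113.10")]}  # sample -> contacted IPs
SCANNER = {"203.0.113.10": [("jarm", "jarm-fp-1")]}  # IP -> TLS fingerprint
JARM_INDEX = {"jarm-fp-1": [("ip", "203.0.113.10"), ("ip", "198.51.100.7")]}  # fingerprint -> IPs

LOOKUPS: Dict[str, Lookup] = {
    "hash": lambda v: SANDBOX.get(v, []),
    "ip": lambda v: SCANNER.get(v, []),
    "jarm": lambda v: JARM_INDEX.get(v, []),
}

for indicator, hops in pivot(("hash", "sample-hash-1"), LOOKUPS, max_depth=3).items():
    print(hops, indicator)
```

Here a new host, 198.51.100.7, surfaces three hops from the sample. The traversal is the easy part to automate; deciding whether that host actually matters, and which non-standard pivot to try next, is not.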

Time after time, after looking into an investigation myself, I find important information (and thus, IoCs) missing from CTI reports, including IO reports from CTI providers. This isn’t to sing my own praises; most of these reports are prepared by analysts far more technically capable at malware analysis, pen-testing and more than I will ever be. It’s meant to convey that the industry still neglects OSINT, and, surprisingly, even technical OSINT. This problem will only get worse as cyber threats continue to diversify: IO is the current development, but what will be next?

This isn’t to say that OSINT won’t be subject to automation as well. Low-hanging fruit can and will be automated via tools like ChatGPT, but there are a few key differences that will further protect the OSINT analyst:

Much OSINT research requires the ability to span fields of expertise. A given investigation could include corporate records, social media, technical indicators from domains and more.

Data acquisition is often more of an issue in OSINT than technical analysis of the available data. One first has to know where to look (or how to find the right place to look), and the work relies less on the technical ability to manipulate that data.

CTI is a great field and one that I find truly fascinating, but a lot of the technical elements of it are comparatively ripe for automation. Food for thought for those in the field.

Honey Badgers Hold Up Half the Sky

ASPI’s newest report on Chinese IO, “Gaming Public Opinion”, covers a previously unreported information operation run by China’s Ministry of Public Security (MPS), and it left me no choice but to read it quickly and respond. For those with less time to sit down and read, take a look at Albert Zhang’s Twitter thread on the report here.

The operation, believed to be tied to the seemingly never-ending Spamouflage operation, was active on Twitter, Facebook, Reddit, Tumblr, Medium, VK, Weibo and ByteDance products. Fascinatingly, the authors were able to attribute some accounts to Chinese individuals in Jiangsu province, at least some of whom are suspected to be police officers.

I’d also like to tip my hat to ASPI, who pulled an absolute Gigachad move by sending canary tokens to the network’s Reddit accounts to expose the IP addresses behind them. ASPI reported that the tokens were accessed from over 20 unique IP addresses, most of which belonged to VPN providers. One of them (185.220.101(.)37), however, had previously been identified as carrying out Log4j scanning similar to past scanning by APT41, as reported by Mandiant.
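For readers unfamiliar with the technique: a canary token is typically a unique, unguessable URL (or a document that fetches one), and whoever opens it reveals the IP address their request comes from. This standard-library Python sketch shows only the core idea; the account names are hypothetical, and it is not how ASPI or the Canarytokens service actually implements it.

```python
import secrets
from http.server import BaseHTTPRequestHandler
from urllib.parse import urlsplit

# Hypothetical targets: one unguessable token per account under investigation.
TOKENS = {secrets.token_urlsafe(8): name for name in ("account_a", "account_b")}
HITS = []  # (account, client_ip) pairs, appended whenever a token URL is fetched


def record_hit(path, client_ip):
    """Log which account's token was opened and from which IP; return the account or None."""
    token = urlsplit(path).path.lstrip("/")  # ignore any query string
    account = TOKENS.get(token)
    if account is not None:
        HITS.append((account, client_ip))
    return account


def unique_ips(account):
    """All distinct IPs that opened a given account's token."""
    return {ip for name, ip in HITS if name == account}


class CanaryHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # client_address[0] is the connecting IP: often a VPN exit, occasionally the operator's own.
        record_hit(self.path, self.client_address[0])
        self.send_response(200)
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, format, *args):
        pass  # keep hits in HITS rather than on stderr
```

To run it, one could serve the handler with `HTTPServer(("0.0.0.0", 8080), CanaryHandler).serve_forever()` (from `http.server`) on a host you control and send each account its own URL. As ASPI found, most hits will come from VPN exits, so the interesting result is the occasional address that doesn’t.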

I’ll bring up my own thoughts on the report below:

OpSec is hard

I’ve said it time and time again, but it continues to ring true: the larger and more complex an operation, the higher the chance of an OpSec slip-up. We see it in this case in the exposure of the new operation, “Operation Honey Badger”, via screenshots uploaded by inauthentic accounts that inadvertently mentioned the operation.

Chinese Platforms Can be Crucial

While my thoughts on Twitter analysis are now pretty clear, it’s refreshing to see data accessed from Weibo and ByteDance products. Chinese platforms themselves are underutilized in our own exploration of Chinese IO, and, as shown and discussed in the report, they can be crucial to attribution thanks to Chinese data transparency laws; one example is Weibo’s decision to display users’ IP-based locations.

Chinese platforms are increasingly difficult to work on, but the effort is well worth it. Additionally, some social listening tools such as Meltwater offer certain degrees of coverage of Chinese social media platforms.

Indicators are Good

This is a short one: kudos to ASPI for sharing the hashtags promoted by the network. Amazingly, this still isn’t done frequently enough.
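Shared hashtags are valuable precisely because others can check them against their own collection. A minimal Python sketch of that check, matching posts against a published hashtag list case-insensitively; the hashtags here are placeholders, not ones from the ASPI report.

```python
import re
from typing import Iterable, Set

# In Python 3, \w matches Unicode letters and digits, so non-Latin hashtags are captured too.
HASHTAG_RE = re.compile(r"#(\w+)")


def matching_hashtags(post: str, indicators: Iterable[str]) -> Set[str]:
    """Return indicator hashtags (lowercased, without '#') that appear in a post."""
    wanted = {tag.lstrip("#").lower() for tag in indicators}
    found = {tag.lower() for tag in HASHTAG_RE.findall(post)}
    return wanted & found


# Placeholder indicator list; substitute the hashtags published in a report.
INDICATORS = ["#ExampleTag", "#AnotherTag"]
print(matching_hashtags("Sharing this again #exampletag #unrelated", INDICATORS))
```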

Cyber Threat Intelligence and IO go Hand in Hand

I’ve posted in the past about the role that China’s domestic CTI industry plays in its IO campaigns. Chinese IO operations have claimed that the infamous APT41 is in fact an American APT, attempted to impersonate Intrusion Truth and more. CTI reports on American APTs (often using old indicators and of low quality) from Chinese firms such as Qi An Xin’s Pangu Lab and Qihoo 360 have been promoted by Chinese state media.

ASPI correctly notes that 2022 was a banner year for Chinese CTI firms, which published more reports than usual despite the lack of large or notable incidents.

ASPI found that Operation Honey Badger attributes “cyber-espionage operations to the US Government”, and is believed to be tied to the MPS.

There’s an advantage to focusing on these as well: as ASPI mentions, deciphering APT reports is beyond the capability of the average person, or even the average analyst. The report even raises the possibility that APT reports and alerts provided by Chinese CTI firms to foreign clients may be seeded with disinformation, for example by blaming American government actors for breaches.

Interestingly, ASPI proposes that Qihoo 360 not only engages in strategic cooperation with the Chinese government, and the MPS in particular, but may also be responsible for providing its offensive IO infrastructure globally to create and manage online assets, as shown below:

As an aside, I’ll take this moment to promote my Chinese social media CSE (custom search engine). See the results below for an article mentioned in the ASPI report that was promoted by the network:

Argo Hack Yourself

The Washington Post reported that US Cyber Command and CISA identified and remediated a previously classified Iranian hacking attempt against US election-related websites during the 2020 elections. The hacking attempts, which were initially successful, targeted a local government site that would have published election results.

Iran is still an underrated cyber and IO actor, and its role in the 2020 elections and in subsequent US elections is arguably under-analyzed. Eventually I’ll get around to writing something more in-depth on the topic.

Bribing Me Softly With Low’s Money

In what can only be described as a FUBAR situation - Fugee’d Up Beyond All Recognition - Pras Michel, a member of the Fugees, was convicted in the US of 10 charges, including corruption-related charges, acting as an unregistered agent and more.

Pras acted on behalf of Malaysian billionaire Jho Low, promoting Low’s interests in the US during both the Obama and Trump administrations. As reported by Politico, Low paid Pras over 80 million USD.

The trial itself must have been quite the sight - Leonardo DiCaprio had to testify as he had been indirectly involved with Low, who had allegedly provided some past financing for one of DiCaprio’s movies.

Michel’s interference included attempting to influence the Trump administration to drop its investigation into Low’s alleged theft of billions from Malaysia’s sovereign fund. As such, Jeff Sessions also made a guest appearance in the trial.

Michel also lobbied the Trump administration to extradite Guo Wengui, a major Chinese government priority. Low presumably requested this either at the behest of Chinese officials or to curry favor with China.

F is for Friends who Forge Stuff Together

Rory Cormac, a UK academic who works on interference, influence, espionage and related topics, recently shared a fun tweet about the history of forgeries. He has also written some great, quite accessible books on the topic that I’d recommend.

Western governments create forgeries for IO/disinformation purposes far less frequently than they did during the Cold War. Before the internet, the investment required to make a forgery appear authentic was also quite high: as Cormac notes, the letterhead had to be perfect, as did the staples, the paper and more.

One can’t help but get at least a little caught up in the romance of those days: the tactile clickety-clack of just the right model of Arabic Remington typewriter (which, by the way, would make a fantastic stage name), finding just the right paper (not too thick or coarse), and polishing it all off with a forged stamp or signature, all done by hand.

The upside of doing all of this manually with analog tools is that it’s much harder to prove that the document is in fact a forgery. Digital forensics and lazy OpSec often expose digital forgeries, as we’ve discussed here numerous times in the past. While we certainly won’t be going back to the pamphlet-dropping days of yore anytime soon, I can’t help but wonder whether analog forgeries may still have a role in targeted operations.
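On the digital side, one of the most common ways a forgery gets exposed is document metadata. As a minimal illustration, this standard-library Python sketch reads the author and timestamp fields that Word documents typically store in `docProps/core.xml` inside the .docx package. It is one check among many, and metadata can itself be scrubbed or faked.

```python
import xml.etree.ElementTree as ET
import zipfile
from typing import BinaryIO, Dict, Optional, Union

# Namespaces used by the Office Open XML core-properties part.
NS = {
    "cp": "http://schemas.openxmlformats.org/package/2006/metadata/core-properties",
    "dc": "http://purl.org/dc/elements/1.1/",
    "dcterms": "http://purl.org/dc/terms/",
}
FIELDS = {
    "creator": "dc:creator",
    "last_modified_by": "cp:lastModifiedBy",
    "created": "dcterms:created",
    "modified": "dcterms:modified",
}


def docx_metadata(source: Union[str, BinaryIO]) -> Dict[str, Optional[str]]:
    """Return author and timestamp fields from a .docx core-properties part (None if absent)."""
    with zipfile.ZipFile(source) as archive:
        # Raises KeyError if the package has no core-properties part.
        root = ET.fromstring(archive.read("docProps/core.xml"))
    result: Dict[str, Optional[str]] = {}
    for key, tag in FIELDS.items():
        element = root.find(tag, NS)
        result[key] = element.text if element is not None else None
    return result
```

Called as, say, `docx_metadata("leaked_memo.docx")` (a hypothetical file), an author that doesn’t match the purported sender, or a creation date after the events the document describes, is a classic red flag.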

#RidingWithBiden

As a closing note, I just wanted to share with you this incredibly based 404 message on Joe Biden’s campaign site.

That’s it for this week everyone - hope you found it to be interesting!
