Digital Defense Report 2: Hybrid Boogaloo

Welcome to Memetic Warfare.

This week, we’ll take a look at Microsoft’s Digital Defense Report for 2024:

This is a hefty report; see the table of contents below if you’re interested in reading specific sections:

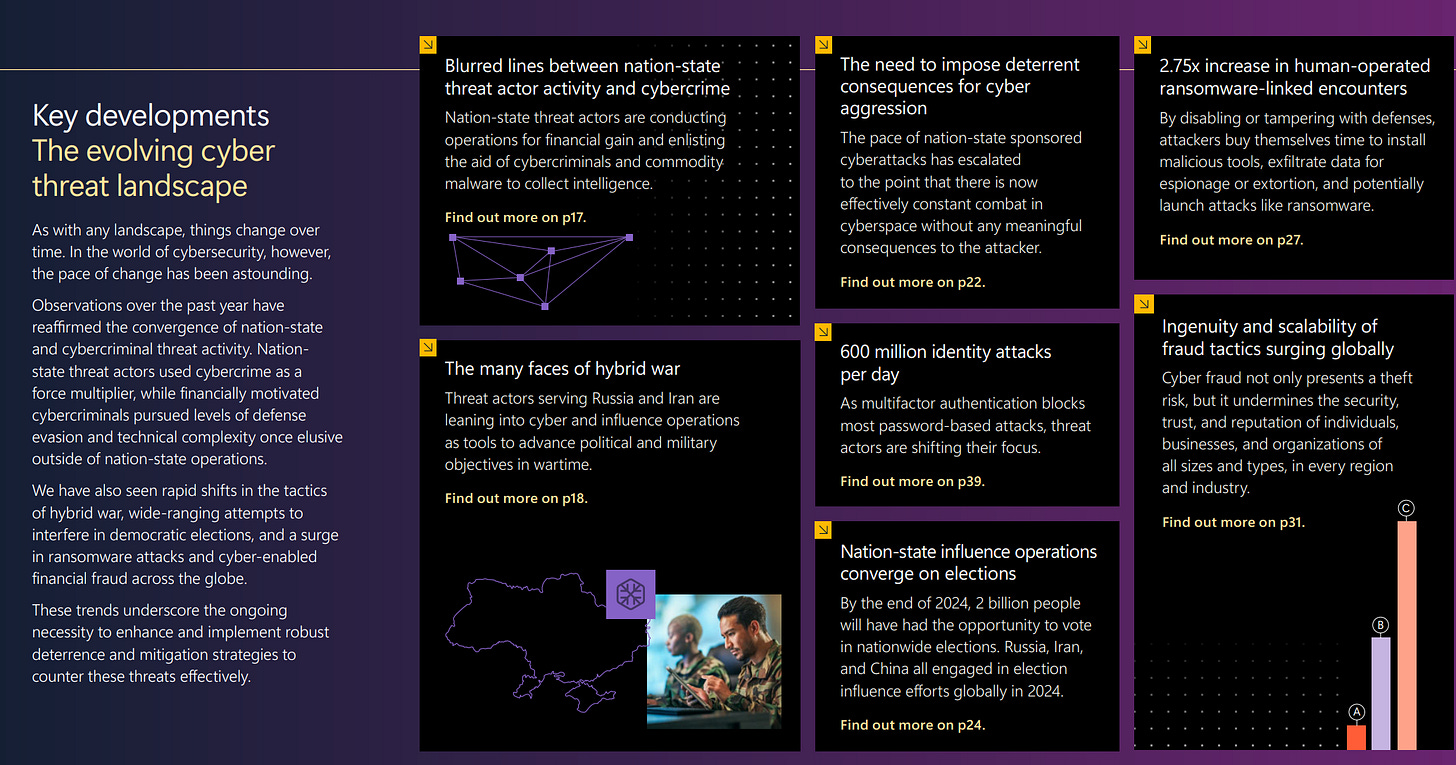

As always, I’ll share the key developments/executive summary below:

There are some recurring classics: the confluence of APT activity and cybercrime, hybrid warfare/election interference, ransomware and, of course, the need for the mythical unicorn of “cyber deterrence”.

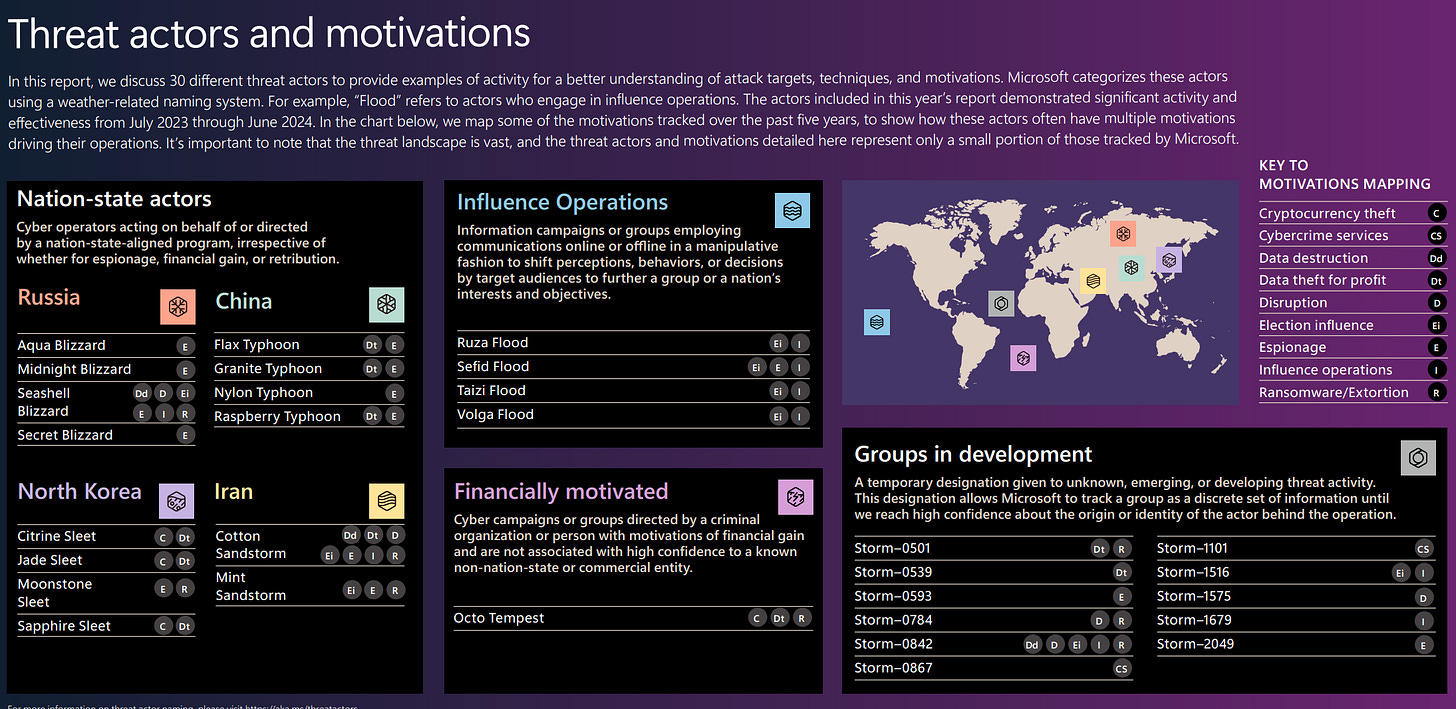

The threat actor overview reflects what has been commonly seen as well - a rise in Iranian IO/espionage activity, while leading Chinese threat actors stay exclusively in the realm of espionage and “data theft”. It’s been a comparatively quiet year or so for China; perhaps we’ll see more in 2025.

One of the reasons I enjoy reading reports from platforms, Microsoft especially, is the holistic analysis of global activity and the use of their proprietary data. I may poke at Microsoft here or there for not being more specific or sharing certain datapoints, but on the whole no other platform (for lack of a better word) is doing what they do in terms of reporting.

See the slide on global targeting below for an example. Israel being on par with the US is a borderline shocking statistic.

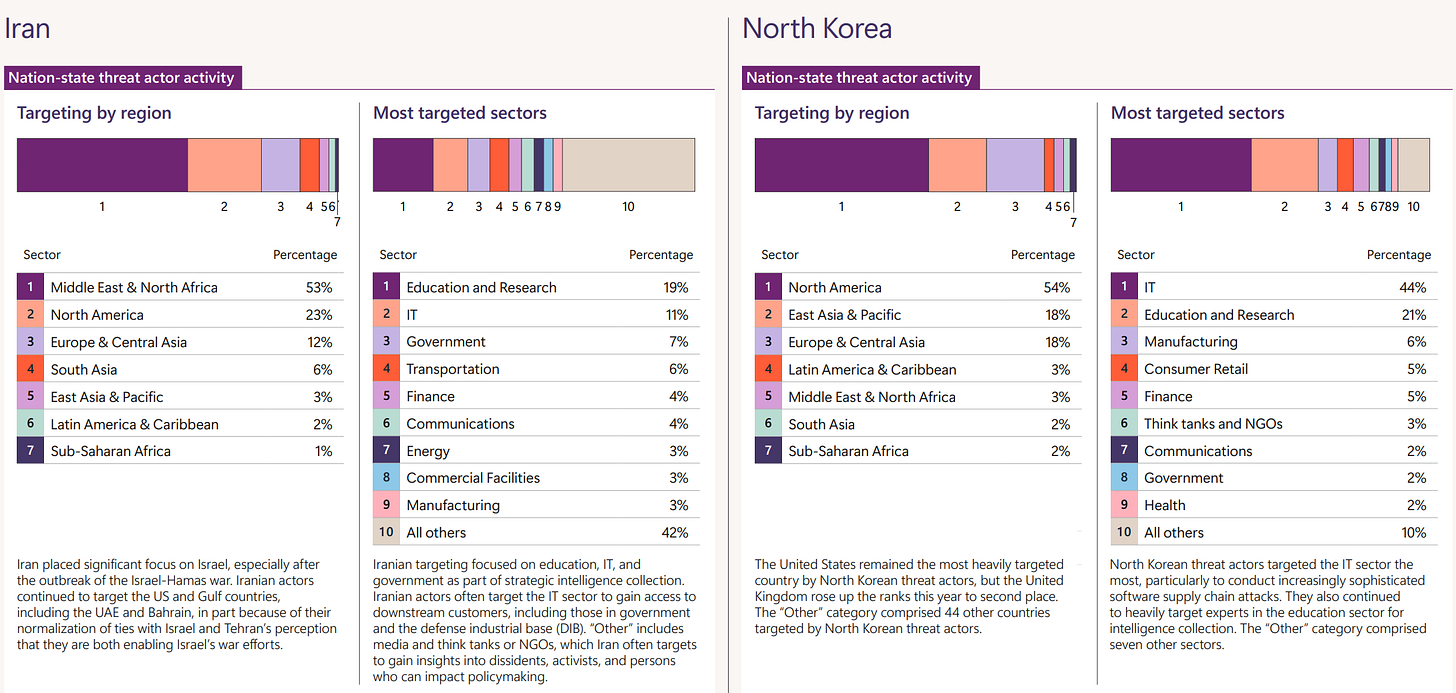

The trend of data-driven reporting continues with a breakdown of the sectors targeted and why:

Iran is, of course, focusing the majority of its activity on Israel, followed by the Gulf states:

Just look at these statistics as well - this is why I enjoy these reports, and this one doesn’t disappoint.

The report also discusses deterring cyber operations.

Personally, I’m of the opinion that cyber operations can never be deterred in the traditional sense of the word. Just like crime can never be fully eradicated or espionage operations rolled up, cyber operations (and influence operations as well) will always be carried out by nation-states and other threat actors because the value proposition will almost always be to their benefit. The deniability, accessibility and effectiveness of these operations will always make the risk calculus worth it.

Could certain types of operations be deterred to a degree? Possibly, but even then we haven’t seen much supporting evidence for that being the case. Naming and shaming, sanctions, indictments and more have come and gone (and been covered in this blog), and I’m in favor of those activities, as they’re essentially free and incur “some” cost, but they’re clearly insufficient.

Strengthen international norms: if you believe in this doing anything, I have a bridge to sell you.

Sharpen government attribution: already done, we have multiple cases of governments attributing hostile behavior to no impact.

Impose consequences: this one is a step in the right direction, but even then, the ideas proposed, such as targeted collective sanctions, are milquetoast and already being done.

Microsoft’s proposals above are fine, but have mostly already been done and are ineffective. Some even use the corporate memespeak that I am wont to make fun of, such as “governments should deepen partnerships across stakeholder groups”.

I should state again that I’m not criticizing Microsoft per se: there is no actual answer to this question, and I’m happy that they threw their hat into the ring. I give them credit for getting into a fairly contentious topic. I’m considering writing something about this topic in particular in the near future, but spoiler alert: I also don’t have a clear-cut answer to this. No one does.

There’s also no question that they couldn’t recommend the spicier options bandied about, such as kinetic operations against ransomware operators. Overall, a fine section, but there’s nothing really new here, and the whole belief in the international norms- and rules-based order is very 2012 in its vibes.

We’ll conclude with some other sections of the report covering IO-related affairs. Election interference is a big topic; check out the timeline below:

There’s also a legally-mandated section on generative AI use:

China and Russia are using it, as we’re well aware, and the examples provided are those that have been covered in the past. Iran gets a shoutout as it is still in the “early stages” of AI use, despite being one of the top users quantitatively as per OpenAI and Google.

There’s also an interesting section on ethical use of influence operations, including recommendations:

The target limitations are reasonable in terms of the humanitarian costs of certain operations, such as undermining emergency/humanitarian response groups or inciting directly against protected groups, but the others aren’t as clear-cut.

Nation-states shouldn’t exploit crises or emergencies to negatively impact civilians, but would it be so wrong for, say, a Western state to covertly target an authoritarian government over a natural disaster exacerbated by poor management or corruption? How about interfering in a sham election run by a dictator?

The limitations on tooling are arbitrary as well. Why exactly shouldn’t a state use AI as part of a covert influence operation? Why shouldn’t nation-states exploit social media data to support influence operations? Nation-states break laws all the time as part of cyber and espionage operations, so what’s the difference here?

We’ll conclude on the above questions, but I want to reiterate: this was a great report by Microsoft, and I want to give them credit for publishing it. I can only dream of other platforms and tech companies publishing so frequently and in-depth on these topics, with my main issue being that I want more detail and indicators. It’s also refreshing to see a holistic view taking center stage, with IO and cyber being viewed as two sides of the same coin.

One final note - I don’t want to go into it as this post is already too long, but if you’re curious, check out Viginum’s latest look at AI and IO here.

Thanks for reading, and have a great week.