describing the disruption of malicious use of AI: a blog post

Welcome to Memetic Warfare.

This week has some big news, namely OpenAI’s latest report, available here.

As an aside, I respect their minimalist font aesthetics and visual choices for their reports.

The report covers multiple areas, only some of which we’ll discuss here:

This report is especially exciting for me, as it refers to and relies on work published by Max Lesser and me at the FDD and on Memetic Warfare - see the Memetic Warfare post for the detailed writeup and technical investigation:

On that note, let’s start with Iran and then move on to the other sections.

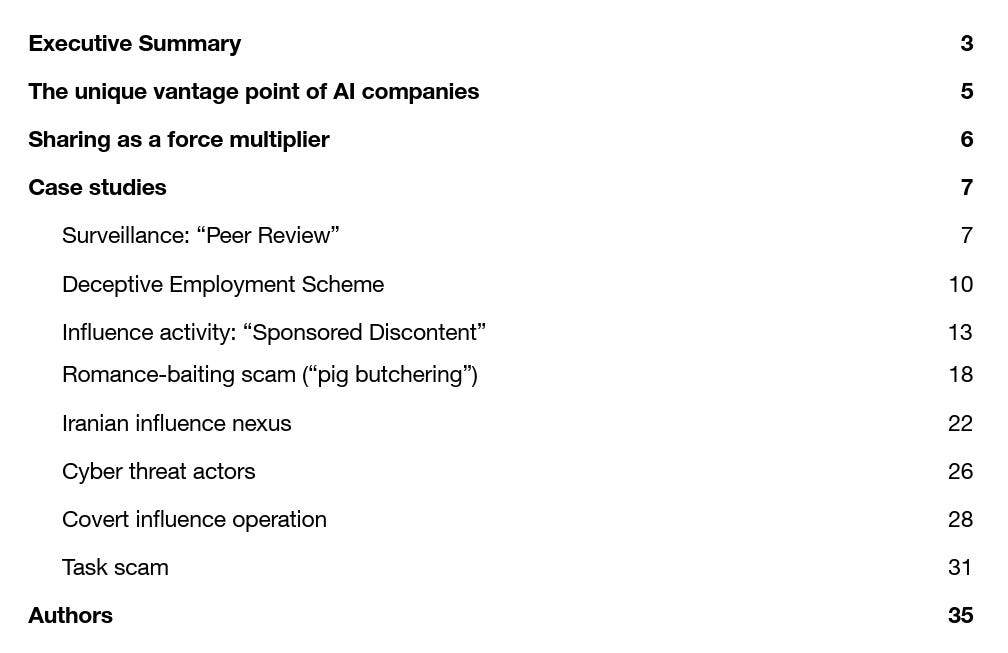

The Iran section focused on one cluster of activity under the much-discussed IUVM, leading to five ChatGPT accounts being banned:

The most interesting part here is attribution. OpenAI’s unique vantage point, as they describe it, revealed technical indicators that ChatGPT was used for both operations, enabling them to attribute both IUVM and Storm-2035 (the cluster targeting the US elections) to the same actor.

They elaborate on this by showing that of the five accounts, four generated content for Storm-2035 and the fifth generated content for IUVM, but that they all had overlapping indicators:

Note that the links above are the references to Max’s and my work at the FDD. Note also that we have a much-delayed paper coming out sometime soon that describes the network’s domains in further detail.

Anyway, on that note, one of the more interesting domains, al-Sarira, used ChatGPT to generate tweets. OpenAI further corroborated our work, confirming that the domains Critique Politique and La Linea Roja were also tied to the same operators, as our investigation had shown. The use of rewrites is also an interesting TTP.

Additionally, the report notes that some of the other accounts were used to generate content for teaching English and Spanish as second languages, with some interesting additional context that Iranian threat actors apparently often recruit English teachers:

So, this was incredibly vindicating and rewarding for me personally, and I’m super happy to see that Max’s and my research was impactful here - definitely an interesting case, and I hope that we get more coverage of this network from other investigators and platforms as well.

The report doesn’t stop there, though much of it isn’t super relevant for us. Personal connection aside, the other most interesting section was the opening section on Chinese activity, cleverly titled “Peer Review”:

I’ll leave the summary below:

The cluster of ChatGPT accounts in this case all did pretty overtly China-related stuff, such as looking into information about think tanks and officials in the US, Australia and apparently Cambodia.

Translating documents, monitoring dissident groups, assuming the identity of an avatar named “Thompson” and more were part and parcel of the activity, which even included “promotional material” for an AI-powered social media listening tool. Quick, someone connect them with Y Combinator!

This social listening tool was apparently called the Qianyue Overseas Public Opinion AI Assistant, meant to monitor social media platforms for protests in order to inform embassies, intelligence agencies and so on. Notably, the tool apparently refers to LLaMa the most, with only a few mentions of DeepSeek.

There’s no evidence of this platform ever being developed, but it’s certainly an interesting development and reflective of what we’ve seen from past Chinese activity in the space.

There are multiple other sections of the report on scams, use of ChatGPT by North Korean cyber operators, election interference in Ghana and more, so check those out if they’re of interest.

Here’s to hoping that this cadence is representative of what OpenAI plans on publishing going forward.

Excited to read the full report from FDD.